Peeling back the fastai layered API with Fashion MNIST

Chapter 4 of the fastai book covers how to build a neural network from scratch to distinguish 3s from 7s in MNIST. We’re going to do something similar, but instead of building the neural network from the ground up we’re going to use fastai’s layered API to build it top down. We’ll start with the high-level API to train a dense neural network in a few lines. Then we’ll redo the problem, going deeper and deeper into the API. At its core it’s mainly PyTorch, and we’ll end up with a pure PyTorch implementation like the one in the book. Then we’ll rebuild the abstractions from scratch to get back to a high-level API like the one we started with.

Instead of the MNIST digits we’ll use Fashion MNIST, which contains small greyscale images of different types of clothing. This is a harder problem, and a convolutional neural network would perform better here (as demonstrated in v3 of the fastai course). To keep things simple, though, we’ll use a dense neural network.

This post was generated with a Jupyter notebook. You can also view this notebook on Kaggle or download the Jupyter notebook.

Training a model in 5 lines of code

We train a model to recognise these items of clothing from scratch in just 5 lines using fastai’s high-level API. It should take a minute or two to run on a CPU. For such a small model and dataset there is little benefit to running on a GPU.

# 1. Import

from fastai.tabular.all import *

# 2. Data

df = pd.read_csv('../input/fashionmnist/fashion-mnist_train.csv', dtype={'label':'category'})

# 3. Dataloader

dls = TabularDataLoaders.from_df(df, y_names='label', bs=4096, procs=[Normalize])

# 4. Learner

learn = tabular_learner(dls, layers=[100], opt_func=SGD, metrics=accuracy, config=dict(use_bn=False, bn_cont=False))

# 5. Fit

learn.fit(40, lr=0.2)

| epoch | train_loss | valid_loss | accuracy | time |
|---|---|---|---|---|
| 0 | 1.204540 | 0.779914 | 0.735917 | 00:01 |
| 1 | 0.917599 | 0.632047 | 0.772750 | 00:01 |
| 2 | 0.787847 | 0.568341 | 0.791167 | 00:01 |
| 3 | 0.702365 | 0.522808 | 0.811083 | 00:01 |
| 4 | 0.642320 | 0.510324 | 0.811167 | 00:01 |
| 5 | 0.603035 | 0.491477 | 0.822083 | 00:01 |
| 6 | 0.568987 | 0.463034 | 0.831250 | 00:01 |
| 7 | 0.538885 | 0.449788 | 0.835583 | 00:01 |
| 8 | 0.514483 | 0.440531 | 0.839333 | 00:01 |
| 9 | 0.495511 | 0.436088 | 0.840583 | 00:01 |
| 10 | 0.479304 | 0.446928 | 0.833500 | 00:01 |
| 11 | 0.467378 | 0.419800 | 0.846667 | 00:01 |
| 12 | 0.453736 | 0.412330 | 0.851833 | 00:01 |
| 13 | 0.442266 | 0.409911 | 0.851667 | 00:01 |
| 14 | 0.432882 | 0.413216 | 0.849833 | 00:01 |
| 15 | 0.426103 | 0.408956 | 0.852667 | 00:01 |
| 16 | 0.417694 | 0.396635 | 0.858083 | 00:01 |
| 17 | 0.409261 | 0.394431 | 0.856333 | 00:01 |
| 18 | 0.402036 | 0.396497 | 0.856500 | 00:01 |
| 19 | 0.396574 | 0.393080 | 0.859083 | 00:01 |
| 20 | 0.391744 | 0.395087 | 0.857667 | 00:01 |
| 21 | 0.388619 | 0.405253 | 0.852083 | 00:01 |
| 22 | 0.383373 | 0.388774 | 0.859667 | 00:01 |
| 23 | 0.379811 | 0.391994 | 0.856500 | 00:01 |
| 24 | 0.376847 | 0.384142 | 0.861167 | 00:01 |
| 25 | 0.372048 | 0.376191 | 0.864167 | 00:01 |
| 26 | 0.368737 | 0.383891 | 0.858917 | 00:01 |
| 27 | 0.364437 | 0.380743 | 0.862833 | 00:01 |
| 28 | 0.362288 | 0.370025 | 0.865500 | 00:01 |
| 29 | 0.359911 | 0.370142 | 0.867500 | 00:01 |
| 30 | 0.357278 | 0.384656 | 0.859667 | 00:01 |
| 31 | 0.355596 | 0.364638 | 0.869250 | 00:01 |
| 32 | 0.351848 | 0.363354 | 0.868833 | 00:01 |
| 33 | 0.347400 | 0.362254 | 0.869667 | 00:01 |
| 34 | 0.343837 | 0.364124 | 0.869500 | 00:01 |
| 35 | 0.340539 | 0.362926 | 0.869583 | 00:01 |
| 36 | 0.339874 | 0.368416 | 0.866333 | 00:01 |
| 37 | 0.336479 | 0.369149 | 0.866917 | 00:01 |
| 38 | 0.335846 | 0.371058 | 0.865833 | 00:01 |
| 39 | 0.336446 | 0.383330 | 0.858583 | 00:01 |

We can then measure the performance on the test set. Note that the test accuracy is very close to the validation accuracy in the last row of the training output above.

df_test = pd.read_csv('../input/fashionmnist/fashion-mnist_test.csv', dtype={'label': df.label.dtype})

probs, actuals = learn.get_preds(dl=dls.test_dl(df_test))

print(f'Accuracy on test set {float(accuracy(probs, actuals)): 0.2%}')

Accuracy on test set 85.88%

The sklearn benchmarks on this dataset show that some other models outperform ours, such as Support Vector Machines (SVC) at 89.7% accuracy and Gradient Boosted Trees at 88.8%. In fact, our model is essentially the same kind of model as sklearn's MLPClassifier (87.7%). See if you can beat this baseline by changing the layers, learning rate and number of epochs.
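The accuracy figure above is the fraction of images whose most probable predicted class matches the true label. The following minimal NumPy sketch shows that calculation. It uses made-up probabilities for four hypothetical images rather than real model output. fastai's `accuracy` metric works on PyTorch tensors, but the arithmetic is the same idea.

```python
import numpy as np

def accuracy_from_probs(probs: np.ndarray, targets: np.ndarray) -> float:
    """Fraction of rows whose highest-probability class equals the target."""
    predicted = probs.argmax(axis=1)  # index of the largest probability in each row
    return float((predicted == targets).mean())

# Hypothetical predictions for 4 images over 3 classes (each row sums to 1)
probs = np.array([
    [0.7, 0.2, 0.1],  # predicts class 0
    [0.1, 0.8, 0.1],  # predicts class 1
    [0.3, 0.3, 0.4],  # predicts class 2
    [0.5, 0.4, 0.1],  # predicts class 0
])
targets = np.array([0, 1, 1, 0])

print(f'{accuracy_from_probs(probs, targets):.2%}')  # 3 of 4 correct: 75.00%
```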

The best results on this dataset, around 92-96%, come from Convolutional Neural Networks (CNN). The kind of approach we use here can be extended to a CNN; the other kinds of models are quite different.
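For intuition, consider what `tabular_learner(dls, layers=[100], ...)` builds. With batch norm switched off and only continuous inputs, my understanding of fastai's `TabularModel` is that it reduces to three parts:

- a linear layer from 784 pixels to 100 hidden units
- a ReLU
- a linear layer from 100 units to 10 class scores

The NumPy forward pass below is a sketch under that assumption, not fastai's code. Random weights stand in for trained ones. The softmax at the end turns the scores into probabilities like the ones we passed to `accuracy` above.

```python
import numpy as np

rng = np.random.default_rng(0)

n_pixels, n_hidden, n_classes = 784, 100, 10
batch_size = 8  # a small hypothetical batch; the post trains with bs=4096

# Random weights stand in for trained parameters (an assumption for illustration)
w1 = rng.normal(0, 0.01, size=(n_pixels, n_hidden))
b1 = np.zeros(n_hidden)
w2 = rng.normal(0, 0.01, size=(n_hidden, n_classes))
b2 = np.zeros(n_classes)

def forward(x: np.ndarray) -> np.ndarray:
    """Linear -> ReLU -> Linear, returning one raw score per class."""
    hidden = np.maximum(x @ w1 + b1, 0)  # ReLU zeroes out negative activations
    return hidden @ w2 + b2

def softmax(scores: np.ndarray) -> np.ndarray:
    """Convert raw scores to probabilities; subtracting the row max avoids overflow."""
    shifted = scores - scores.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

x = rng.normal(size=(batch_size, n_pixels))  # stand-in for a batch of normalized pixels
probs = softmax(forward(x))
print(probs.shape)  # (8, 10)
```

Under this assumption the network has 784 × 100 + 100 + 100 × 10 + 10 = 79,510 trainable parameters.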

What did we just do?

Let’s go back through those 5 lines slowly to see what was going on.

1. Import

The first line imports all the libraries we need for tabular analysis.

This includes the fastai libraries themselves, as well as general utilities such as Pandas, NumPy and PyTorch, and much more.

from fastai.tabular.all import *

If you want to see exactly what was imported, you can inspect the module or read the source code.

import fastai.tabular.all

L(dir(fastai.tabular.all))

(#846) ['APScoreBinary','APScoreMulti','AccumMetric','ActivationStats','Adam','AdaptiveAvgPool','AdaptiveConcatPool1d','AdaptiveConcatPool2d','ArrayBase','ArrayImage'...]

This includes standard imports like “pandas as pd”:

fastai.tabular.all.pd

<module 'pandas' from '/opt/conda/lib/python3.7/site-packages/pandas/__init__.py'>

2. Data

We read the data from a CSV file with Pandas, telling Pandas that the label column is categorical.

df = pd.read_csv('../input/fashionmnist/fashion-mnist_train.csv', dtype={'label':'category'})

The dataframe contains a label column giving the kind of clothing in the image, followed by 784 columns of pixel values from 0 to 255.

df

| | label | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | … | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | pixel784 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 2 | 6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5 | 0 | … | 0 | 0 | 0 | 30 | 43 | 0 | 0 | 0 | 0 | 0 |
| 3 | 0 | 0 | 0 | 0 | 1 | 2 | 0 | 0 | 0 | 0 | … | 3 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |
| 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |
| 59995 | 9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 59996 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 73 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 59997 | 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 160 | 162 | 163 | 135 | 94 | 0 | 0 | 0 | 0 | 0 |
| 59998 | 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 59999 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | … | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

60000 rows × 785 columns
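We passed `dtype={'label': 'category'}`, so Pandas stores the labels as categories whose values are the strings read from the file. This is why the label of the first row prints as `'2'` rather than `2` further down. Here is a small sketch using a hypothetical two-row CSV held in memory, laid out like the real file:

```python
import io
import pandas as pd

# A tiny in-memory CSV in the same layout: a label column followed by pixel columns
csv_text = "label,pixel1,pixel2\n2,0,17\n9,0,255\n"

df_small = pd.read_csv(io.StringIO(csv_text), dtype={'label': 'category'})

print(df_small.label.dtype)                  # category
print(list(df_small.label.cat.categories))   # ['2', '9']: the categories are strings
print(df_small.pixel2.dtype)                 # an integer dtype, e.g. int64: pixels stay numeric
```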

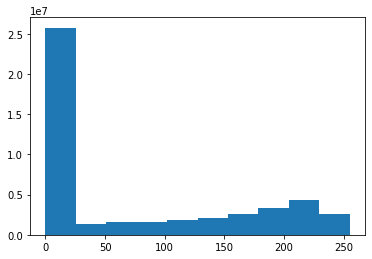

A histogram of the pixels shows they are mostly 0, with values up to 255.

_ = plt.hist(df.filter(like='pixel', axis=1).to_numpy().reshape(-1))

From a single row of the dataframe we can read the label and the pixels:

label, *pixels = df.iloc[0]

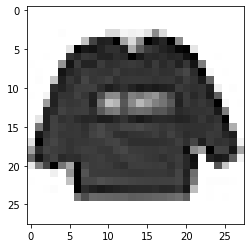

label, len(pixels)

('2', 784)

The 784 pixels are the image's 28 rows laid end to end, each row containing 28 pixels. If we reshape them into a 28 × 28 grid, we can plot them as an image.

image_array = np.array(pixels).reshape(28, 28)

_ = plt.imshow(image_array, cmap='Greys')

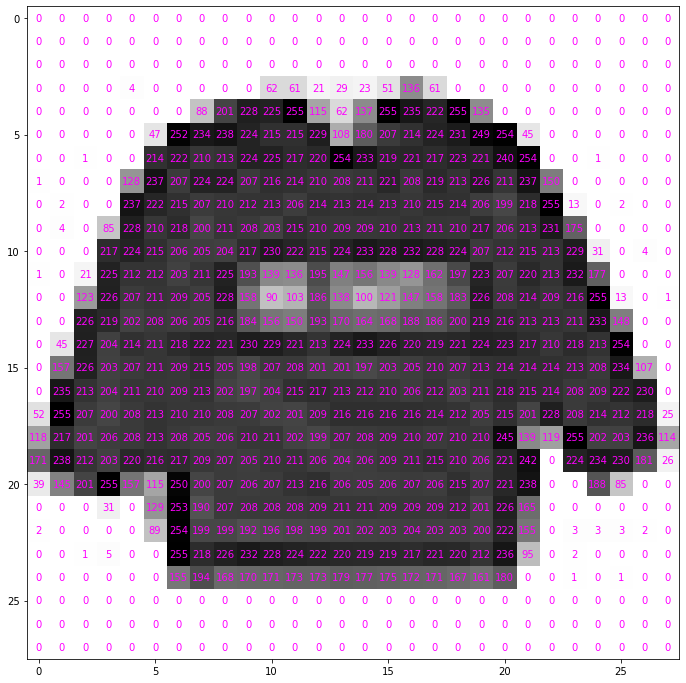

All we are seeing here are the pixel intensities from 0 (white) to 255 (black) on a grid.

fig, ax = plt.subplots(figsize=(12,12))
im = ax.imshow(image_array, cmap="Greys")
# Write each pixel's intensity on top of its square in the image
for i in range(image_array.shape[0]):
    for j in range(image_array.shape[1]):
        text = ax.text(j, i, image_array[i, j], ha="center", va="center", color="magenta")
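The reshape used above is row-major: the flat pixel list fills the grid one row at a time. So the 0-based pixel index `k` lands at row `k // 28`, column `k % 28`, which corresponds to the CSV column `pixel{k+1}`. A quick NumPy check on a synthetic image, where each value is just its own index:

```python
import numpy as np

flat = np.arange(784)            # synthetic "pixels": value k sits at flat position k
grid = flat.reshape(28, 28)      # same row-major reshape as for image_array

k = 100
row, col = divmod(k, 28)         # 100 = 3 * 28 + 16
print(row, col, grid[row, col])  # 3 16 100

# Flattening the grid again recovers the original order
assert (grid.reshape(-1) == flat).all()
```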

The labels are categorical codes for different types of clothing.

We can copy the label description and convert it into a Python dictionary.

labels_txt = """

Label Description

0 T-shirt/top

1 Trouser

2 Pullover

3 Dress

4 Coat

5 Sandal

6 Shirt

7 Sneaker

8 Bag

9 Ankle boot

""".strip()

labels = dict([row.split('\t') for row in labels_txt.split('\n')[1:]])

labels

{'0': 'T-shirt/top',
 '1': 'Trouser',
 '2': 'Pullover',
 '3': 'Dress',
 '4': 'Coat',
 '5': 'Sandal',
 '6': 'Shirt',
 '7': 'Sneaker',
 '8': 'Bag',
 '9': 'Ankle boot'}

The image above is of a Pullover.

label, labels[label]

('2', 'Pullover')

We’ve got 6000 images of each type.

df.label.map(labels).value_counts()

T-shirt/top    6000
Trouser        6000
Pullover       6000
Dress          6000
Coat           6000
Sandal         6000
Shirt          6000
Sneaker        6000
Bag            6000
Ankle boot     6000
Name: label, dtype: int64

3. Dataloader

Now that we have our raw data, we need a way to pass it into the model in a form it understands. We do this with a DataLoader that reads from the dataframe. We need to tell it:

- df: the dataframe to read from
- y_names: the name of the column containing the outcome variable, here label
- bs: the batch size, i.e. how many rows to feed to the model at a time. We use 4096 because the data and model are small
- procs: any preprocessing steps to apply. Here we use Normalize, which rescales each pixel column from the 0 to 255 range to have mean 0 and standard deviation 1
- cont_names: the names of the continuous columns
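As I understand it, fastai's tabular `Normalize` proc works in three steps:

- it computes each continuous column's mean and standard deviation on the training set
- it subtracts the mean and divides by the standard deviation
- it can undo that transformation when decoding

The sketch below mimics the idea in NumPy on a hypothetical 3-row, 2-column array; it is not fastai's code. The small `eps` added to the standard deviation is an assumption of this sketch, there to avoid dividing by zero on columns that are always 0, like many border pixels.

```python
import numpy as np

def fit_normalize(train: np.ndarray, eps: float = 1e-7):
    """Per-column mean and (population) standard deviation from the training rows."""
    return train.mean(axis=0), train.std(axis=0) + eps

def encode(x: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """Centre each column and scale it to unit standard deviation."""
    return (x - mean) / std

def decode(x: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """Undo encode, returning values on the original 0-255 scale."""
    return x * std + mean

# Hypothetical pixel values: the first column is always 0, like many border pixels
train = np.array([[0.0, 0.0],
                  [0.0, 128.0],
                  [0.0, 255.0]])

mean, std = fit_normalize(train)
encoded = encode(train, mean, std)

print(np.allclose(encoded.mean(axis=0), 0))            # True: every column is centred on 0
print(np.allclose(encoded.std(axis=0), [0, 1]))        # True: constant column stays 0, the other has std 1
print(np.allclose(decode(encoded, mean, std), train))  # True: decoding restores the pixel values
```

The round trip through `encode` and `decode` is only exact up to floating-point error. That is consistent with the tiny values, such as -1.6e-11, that `show_batch` displays in place of zeros below.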

Earlier we didn’t pass cont_names, and fastai detected the continuous columns automatically. However, automatic detection can reorder the columns, so here we specify them explicitly for clarity.

dls = TabularDataLoaders.from_df(df, y_names='label', bs=4096, procs=[Normalize], cont_names=list(df.columns[1:]))

This data loader can then produce, on demand, the pixel arrays for a batch of rows along with their labels. Note that show_batch displays the values with the procs undone (Normalize is reversed). They are back on the 0 to 255 scale, apart from small floating-point errors.
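Conceptually, each pass over the training set shuffles the rows and hands them to the model `bs` at a time as (inputs, targets) pairs. The generator below is a hypothetical NumPy stand-in for that behaviour. It is not fastai's implementation, which also handles the validation split, procs and device placement. Because 4096 rarely divides the number of rows evenly, the final batch is smaller. Whether to keep or drop it is a design choice, and this sketch keeps it.

```python
import numpy as np

def iterate_batches(x: np.ndarray, y: np.ndarray, bs: int, shuffle: bool = True, seed: int = 0):
    """Yield (x_batch, y_batch) pairs of at most `bs` rows each."""
    idx = np.arange(len(x))
    if shuffle:
        np.random.default_rng(seed).shuffle(idx)  # new row order for this pass
    for start in range(0, len(idx), bs):
        batch = idx[start:start + bs]
        yield x[batch], y[batch]

# Hypothetical data shaped like Fashion MNIST rows: 784 pixels and one label each
x = np.zeros((10_000, 784), dtype=np.float32)
y = np.zeros(10_000, dtype=np.int64)

sizes = [len(xb) for xb, yb in iterate_batches(x, y, bs=4096)]
print(sizes)  # [4096, 4096, 1808]
```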

dls.show_batch()

| pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | pixel10 | pixel11 | pixel12 | pixel13 | pixel14 | pixel15 | pixel16 | pixel17 | pixel18 | pixel19 | pixel20 | pixel21 | pixel22 | pixel23 | pixel24 | pixel25 | pixel26 | pixel27 | pixel28 | pixel29 | pixel30 | pixel31 | pixel32 | pixel33 | pixel34 | pixel35 | pixel36 | pixel37 | pixel38 | pixel39 | pixel40 | pixel41 | pixel42 | pixel43 | pixel44 | pixel45 | pixel46 | pixel47 | pixel48 | pixel49 | pixel50 | pixel51 | pixel52 | pixel53 | pixel54 | pixel55 | pixel56 | pixel57 | pixel58 | pixel59 | pixel60 | pixel61 | pixel62 | pixel63 | pixel64 | pixel65 | pixel66 | pixel67 | pixel68 | pixel69 | pixel70 | pixel71 | pixel72 | pixel73 | pixel74 | pixel75 | pixel76 | pixel77 | pixel78 | pixel79 | pixel80 | pixel81 | pixel82 | pixel83 | pixel84 | pixel85 | pixel86 | pixel87 | pixel88 | pixel89 | pixel90 | pixel91 | pixel92 | pixel93 | pixel94 | pixel95 | pixel96 | pixel97 | pixel98 | pixel99 | pixel100 | pixel101 | pixel102 | pixel103 | pixel104 | pixel105 | pixel106 | pixel107 | pixel108 | pixel109 | pixel110 | pixel111 | pixel112 | pixel113 | pixel114 | pixel115 | pixel116 | pixel117 | pixel118 | pixel119 | pixel120 | pixel121 | pixel122 | pixel123 | pixel124 | pixel125 | pixel126 | pixel127 | pixel128 | pixel129 | pixel130 | pixel131 | pixel132 | pixel133 | pixel134 | pixel135 | pixel136 | pixel137 | pixel138 | pixel139 | pixel140 | pixel141 | pixel142 | pixel143 | pixel144 | pixel145 | pixel146 | pixel147 | pixel148 | pixel149 | pixel150 | pixel151 | pixel152 | pixel153 | pixel154 | pixel155 | pixel156 | pixel157 | pixel158 | pixel159 | pixel160 | pixel161 | pixel162 | pixel163 | pixel164 | pixel165 | pixel166 | pixel167 | pixel168 | pixel169 | pixel170 | pixel171 | pixel172 | pixel173 | pixel174 | pixel175 | pixel176 | pixel177 | pixel178 | pixel179 | pixel180 | pixel181 | pixel182 | pixel183 | pixel184 | pixel185 | pixel186 | pixel187 | pixel188 | pixel189 | pixel190 | 
pixel191 | pixel192 | pixel193 | pixel194 | pixel195 | pixel196 | pixel197 | pixel198 | pixel199 | pixel200 | pixel201 | pixel202 | pixel203 | pixel204 | pixel205 | pixel206 | pixel207 | pixel208 | pixel209 | pixel210 | pixel211 | pixel212 | pixel213 | pixel214 | pixel215 | pixel216 | pixel217 | pixel218 | pixel219 | pixel220 | pixel221 | pixel222 | pixel223 | pixel224 | pixel225 | pixel226 | pixel227 | pixel228 | pixel229 | pixel230 | pixel231 | pixel232 | pixel233 | pixel234 | pixel235 | pixel236 | pixel237 | pixel238 | pixel239 | pixel240 | pixel241 | pixel242 | pixel243 | pixel244 | pixel245 | pixel246 | pixel247 | pixel248 | pixel249 | pixel250 | pixel251 | pixel252 | pixel253 | pixel254 | pixel255 | pixel256 | pixel257 | pixel258 | pixel259 | pixel260 | pixel261 | pixel262 | pixel263 | pixel264 | pixel265 | pixel266 | pixel267 | pixel268 | pixel269 | pixel270 | pixel271 | pixel272 | pixel273 | pixel274 | pixel275 | pixel276 | pixel277 | pixel278 | pixel279 | pixel280 | pixel281 | pixel282 | pixel283 | pixel284 | pixel285 | pixel286 | pixel287 | pixel288 | pixel289 | pixel290 | pixel291 | pixel292 | pixel293 | pixel294 | pixel295 | pixel296 | pixel297 | pixel298 | pixel299 | pixel300 | pixel301 | pixel302 | pixel303 | pixel304 | pixel305 | pixel306 | pixel307 | pixel308 | pixel309 | pixel310 | pixel311 | pixel312 | pixel313 | pixel314 | pixel315 | pixel316 | pixel317 | pixel318 | pixel319 | pixel320 | pixel321 | pixel322 | pixel323 | pixel324 | pixel325 | pixel326 | pixel327 | pixel328 | pixel329 | pixel330 | pixel331 | pixel332 | pixel333 | pixel334 | pixel335 | pixel336 | pixel337 | pixel338 | pixel339 | pixel340 | pixel341 | pixel342 | pixel343 | pixel344 | pixel345 | pixel346 | pixel347 | pixel348 | pixel349 | pixel350 | pixel351 | pixel352 | pixel353 | pixel354 | pixel355 | pixel356 | pixel357 | pixel358 | pixel359 | pixel360 | pixel361 | pixel362 | pixel363 | pixel364 | pixel365 | pixel366 | pixel367 | pixel368 | pixel369 | pixel370 | pixel371 | pixel372 
| pixel373 | pixel374 | pixel375 | pixel376 | pixel377 | pixel378 | pixel379 | pixel380 | pixel381 | pixel382 | pixel383 | pixel384 | pixel385 | pixel386 | pixel387 | pixel388 | pixel389 | pixel390 | pixel391 | pixel392 | pixel393 | pixel394 | pixel395 | pixel396 | pixel397 | pixel398 | pixel399 | pixel400 | pixel401 | pixel402 | pixel403 | pixel404 | pixel405 | pixel406 | pixel407 | pixel408 | pixel409 | pixel410 | pixel411 | pixel412 | pixel413 | pixel414 | pixel415 | pixel416 | pixel417 | pixel418 | pixel419 | pixel420 | pixel421 | pixel422 | pixel423 | pixel424 | pixel425 | pixel426 | pixel427 | pixel428 | pixel429 | pixel430 | pixel431 | pixel432 | pixel433 | pixel434 | pixel435 | pixel436 | pixel437 | pixel438 | pixel439 | pixel440 | pixel441 | pixel442 | pixel443 | pixel444 | pixel445 | pixel446 | pixel447 | pixel448 | pixel449 | pixel450 | pixel451 | pixel452 | pixel453 | pixel454 | pixel455 | pixel456 | pixel457 | pixel458 | pixel459 | pixel460 | pixel461 | pixel462 | pixel463 | pixel464 | pixel465 | pixel466 | pixel467 | pixel468 | pixel469 | pixel470 | pixel471 | pixel472 | pixel473 | pixel474 | pixel475 | pixel476 | pixel477 | pixel478 | pixel479 | pixel480 | pixel481 | pixel482 | pixel483 | pixel484 | pixel485 | pixel486 | pixel487 | pixel488 | pixel489 | pixel490 | pixel491 | pixel492 | pixel493 | pixel494 | pixel495 | pixel496 | pixel497 | pixel498 | pixel499 | pixel500 | pixel501 | pixel502 | pixel503 | pixel504 | pixel505 | pixel506 | pixel507 | pixel508 | pixel509 | pixel510 | pixel511 | pixel512 | pixel513 | pixel514 | pixel515 | pixel516 | pixel517 | pixel518 | pixel519 | pixel520 | pixel521 | pixel522 | pixel523 | pixel524 | pixel525 | pixel526 | pixel527 | pixel528 | pixel529 | pixel530 | pixel531 | pixel532 | pixel533 | pixel534 | pixel535 | pixel536 | pixel537 | pixel538 | pixel539 | pixel540 | pixel541 | pixel542 | pixel543 | pixel544 | pixel545 | pixel546 | pixel547 | pixel548 | pixel549 | pixel550 | pixel551 | pixel552 | pixel553 | 
pixel554 | pixel555 | pixel556 | pixel557 | pixel558 | pixel559 | pixel560 | pixel561 | pixel562 | pixel563 | pixel564 | pixel565 | pixel566 | pixel567 | pixel568 | pixel569 | pixel570 | pixel571 | pixel572 | pixel573 | pixel574 | pixel575 | pixel576 | pixel577 | pixel578 | pixel579 | pixel580 | pixel581 | pixel582 | pixel583 | pixel584 | pixel585 | pixel586 | pixel587 | pixel588 | pixel589 | pixel590 | pixel591 | pixel592 | pixel593 | pixel594 | pixel595 | pixel596 | pixel597 | pixel598 | pixel599 | pixel600 | pixel601 | pixel602 | pixel603 | pixel604 | pixel605 | pixel606 | pixel607 | pixel608 | pixel609 | pixel610 | pixel611 | pixel612 | pixel613 | pixel614 | pixel615 | pixel616 | pixel617 | pixel618 | pixel619 | pixel620 | pixel621 | pixel622 | pixel623 | pixel624 | pixel625 | pixel626 | pixel627 | pixel628 | pixel629 | pixel630 | pixel631 | pixel632 | pixel633 | pixel634 | pixel635 | pixel636 | pixel637 | pixel638 | pixel639 | pixel640 | pixel641 | pixel642 | pixel643 | pixel644 | pixel645 | pixel646 | pixel647 | pixel648 | pixel649 | pixel650 | pixel651 | pixel652 | pixel653 | pixel654 | pixel655 | pixel656 | pixel657 | pixel658 | pixel659 | pixel660 | pixel661 | pixel662 | pixel663 | pixel664 | pixel665 | pixel666 | pixel667 | pixel668 | pixel669 | pixel670 | pixel671 | pixel672 | pixel673 | pixel674 | pixel675 | pixel676 | pixel677 | pixel678 | pixel679 | pixel680 | pixel681 | pixel682 | pixel683 | pixel684 | pixel685 | pixel686 | pixel687 | pixel688 | pixel689 | pixel690 | pixel691 | pixel692 | pixel693 | pixel694 | pixel695 | pixel696 | pixel697 | pixel698 | pixel699 | pixel700 | pixel701 | pixel702 | pixel703 | pixel704 | pixel705 | pixel706 | pixel707 | pixel708 | pixel709 | pixel710 | pixel711 | pixel712 | pixel713 | pixel714 | pixel715 | pixel716 | pixel717 | pixel718 | pixel719 | pixel720 | pixel721 | pixel722 | pixel723 | pixel724 | pixel725 | pixel726 | pixel727 | pixel728 | pixel729 | pixel730 | pixel731 | pixel732 | pixel733 | pixel734 | pixel735 
| pixel736 | pixel737 | pixel738 | pixel739 | pixel740 | pixel741 | pixel742 | pixel743 | pixel744 | pixel745 | pixel746 | pixel747 | pixel748 | pixel749 | pixel750 | pixel751 | pixel752 | pixel753 | pixel754 | pixel755 | pixel756 | pixel757 | pixel758 | pixel759 | pixel760 | pixel761 | pixel762 | pixel763 | pixel764 | pixel765 | pixel766 | pixel767 | pixel768 | pixel769 | pixel770 | pixel771 | pixel772 | pixel773 | pixel774 | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | pixel784 | label | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | -1.628456e-11 | 2.116344e-10 | -1.459979e-10 | 2.426657e-09 | -2.665179e-11 | -2.594887e-09 | -2.792910e-08 | -3.333367e-08 | 8.642878e-08 | -4.371375e-07 | 9.682471e-07 | -0.000002 | -5.505288e-07 | 4.053924e-07 | 4.035032e-07 | 2.956210e-07 | 4.005417e-07 | -0.000001 | 5.516976e-08 | -2.068072e-07 | -9.467687e-08 | 9.006170e-08 | -2.751660e-08 | -2.676011e-08 | 1.534132e-09 | 3.965276e-09 | 4.171343e-09 | 5.597892e-10 | -2.459002e-10 | 1.018412e-09 | 4.727461e-09 | 4.497033e-09 | -4.694904e-09 | 8.493365e-08 | -8.031146e-08 | 1.985495e-07 | -1.276844e-07 | -0.000001 | 7.044741e-07 | -0.000002 | -0.000002 | -7.585758e-07 | -0.000006 | 0.000006 | 0.000002 | -1.279166e-07 | 0.000001 | -9.767781e-07 | -6.219337e-07 | 1.444539e-07 | -1.736492e-07 | -8.071513e-09 | -1.345314e-08 | -3.650504e-08 | 3.064484e-09 | 1.395653e-09 | -5.678459e-10 | -8.652231e-10 | 6.095701e-09 | -2.268324e-08 | 1.082306e-07 | 2.055711e-07 | 4.611180e-07 | 3.908779e-07 | 0.000002 | -0.000002 | 0.000002 | 0.000001 | -0.000003 | 0.000003 | -4.594104e-07 | 0.000004 | 0.000005 | 0.000001 | -4.233165e-07 | 0.000002 | -0.000002 | 7.057845e-07 | -1.318873e-07 | -1.672773e-07 | 2.125031e-09 | -8.742525e-08 | -1.006425e-08 | -5.139340e-09 | 2.001768e-09 | -9.364624e-10 | -1.518954e-08 | -4.168295e-08 | 1.493545e-07 | 7.656082e-07 | -3.514349e-07 | -9.688781e-07 | 8.386749e-07 | 0.000002 | 0.000005 | 0.000004 | -0.000003 | -0.000003 | -0.000003 | -0.000004 | -6.836428e-07 | -3.299791e-07 | -0.000005 | 0.000002 | 5.257434e-07 | 8.999998e+00 | 6.600000e+01 | 9.500000e+01 | 9.700000e+01 | 1.000000e+01 | -4.949160e-09 | 1.000000e+00 | 2.329049e-09 | -5.676910e-09 | -1.800060e-08 | -3.427830e-08 | -5.326159e-07 | 2.273738e-07 | 6.555034e-07 | -0.000001 | -0.000002 | 0.000002 | 2.000001 | 0.000003 | 10.000000 | 1.880000e+02 | 2.170000e+02 | 238.000000 | 250.000003 | 251.000002 | 2.550000e+02 | 218.000003 | 2.290000e+02 | 2.550000e+02 | 2.550000e+02 | 2.360000e+02 | 2.550000e+02 | 1.420000e+02 | 
-1.021340e-08 | 2.000000e+00 | -2.561862e-09 | -4.695927e-08 | 4.582200e-08 | -3.727119e-08 | 1.100016e-07 | 0.000002 | -0.000002 | 0.000001 | -0.000002 | -0.000005 | 9.999999 | 6.513797e-07 | 70.999999 | 255.000005 | 236.999996 | 236.000001 | 236.999995 | 229.000004 | 225.000003 | 234.999999 | 2.310000e+02 | 224.000001 | 2.240000e+02 | 2.190000e+02 | 2.440000e+02 | 1.430000e+02 | 1.621610e-07 | 1.000000e+00 | -4.618929e-09 | 4.971934e-09 | 6.497929e-08 | 2.643760e-07 | -2.410009e-07 | -0.000002 | 0.000002 | 0.000001 | -8.460442e-08 | 0.000003 | 6.000000e+00 | -0.000002 | 58.000001 | 246.999997 | 228.000003 | 232.999999 | 233.999998 | 227.999995 | 227.000000 | 232.000000 | 220.999996 | 2.280000e+02 | 239.000001 | 2.310000e+02 | 2.450000e+02 | 1.570000e+02 | -4.032686e-07 | -1.347659e-08 | -2.890140e-08 | 1.131168e-07 | -4.175154e-08 | -5.971974e-07 | 3.715096e-07 | 0.000001 | 0.000001 | -0.000001 | 0.000001 | -0.000004 | 3.999998e+00 | -0.000004 | 1.440000e+02 | 248.999999 | 228.000004 | 241.999999 | 240.999997 | 240.000003 | 237.999997 | 242.999999 | 238.000004 | 234.000004 | 240.000001 | 230.999996 | 2.460000e+02 | 2.150000e+02 | -6.205741e-07 | -9.954905e-08 | 2.976238e-08 | -9.164984e-08 | -1.933697e-07 | -3.981918e-07 | 4.334051e-07 | -0.000002 | 7.406876e-07 | -0.000003 | -9.455209e-07 | 0.000004 | 0.000004 | -0.000002 | 154.999998 | 246.999996 | 217.000003 | 230.000003 | 228.000001 | 228.000003 | 229.000003 | 223.999996 | 2.300000e+02 | 229.999996 | 237.999998 | 220.000002 | 244.999993 | 2.220000e+02 | -8.944622e-08 | 9.377016e-08 | -2.152347e-08 | -9.805354e-08 | 1.100760e-07 | -1.284166e-07 | -8.785177e-07 | -5.893633e-07 | 9.804652e-07 | 0.000001 | -0.000003 | -0.000005 | -0.000004 | -0.000004 | 183.999997 | 250.000004 | 2.260000e+02 | 232.000004 | 228.999998 | 228.000002 | 226.999997 | 221.999995 | 229.000000 | 234.000001 | 231.999997 | 228.000004 | 2.410000e+02 | 2.440000e+02 | 3.626715e-07 | 7.653776e-08 | 3.391509e-08 | -1.837763e-07 | -1.002178e-07 | 
9.562319e-08 | 5.449854e-07 | 6.690875e-07 | 0.000002 | 0.000003 | -0.000002 | 0.000003 | -0.000003 | 0.000002 | 254.999999 | 241.000002 | 229.000004 | 233.999998 | 235.999997 | 236.000002 | 233.999998 | 231.000000 | 2.350000e+02 | 239.000004 | 234.999997 | 2.370000e+02 | 230.999995 | 255.000001 | 1.000000e+02 | 1.421396e-07 | -3.134657e-08 | -1.488990e-07 | -2.217518e-07 | -7.524475e-07 | -8.247956e-07 | 2.124748e-07 | -0.000002 | 0.000002 | -0.000002 | 1.999999 | 0.000005 | 29.000004 | 254.999996 | 229.999997 | 237.000003 | 234.000000 | 236.999997 | 238.000002 | 236.000000 | 235.000003 | 235.999998 | 232.000001 | 236.999995 | 237.000003 | 229.000001 | 2.390000e+02 | 226.999998 | 3.276677e-07 | -1.048992e-07 | -9.582211e-08 | -1.728624e-07 | 4.348013e-07 | 0.000001 | 0.000002 | 0.000001 | 0.000003 | 0.000002 | 0.000002 | -0.000004 | 176.999998 | 249.999996 | 225.999996 | 2.370000e+02 | 233.000003 | 234.999999 | 237.000003 | 234.999996 | 233.000005 | 236.000004 | 235.999998 | 235.000000 | 233.000000 | 230.000003 | 226.000004 | 255.000000 | 2.700000e+01 | -1.249524e-07 | 8.047429e-10 | 1.957992e-07 | -5.361049e-07 | 0.000001 | 0.000002 | -7.203892e-07 | 0.000001 | 3.000000 | 0.000001 | 24.999996 | 254.999996 | 230.999999 | 245.000004 | 234.999997 | 234.000001 | 232.000003 | 235.999999 | 235.999998 | 233.999996 | 2.370000e+02 | 235.999995 | 235.999998 | 230.000004 | 227.999997 | 2.210000e+02 | 254.999991 | 9.300000e+01 | 5.450883e-08 | -1.799488e-07 | -2.672337e-07 | 3.815783e-07 | 0.000002 | -8.765240e-07 | 0.000002 | 0.000005 | -0.000001 | 0.000003 | 201.999999 | 243.999999 | 226.000003 | 2.350000e+02 | 237.000004 | 234.000001 | 230.999999 | 233.999997 | 235.000000 | 232.000001 | 234.999997 | 2.350000e+02 | 2.300000e+02 | 226.000000 | 224.999997 | 2.210000e+02 | 255.000004 | 1.510000e+02 | 3.196277e-07 | 5.418402e-08 | -2.917852e-07 | 7.308653e-07 | -0.000002 | 1.000003 | 1.999999e+00 | 0.000004 | 0.000005 | 1.070000e+02 | 254.999997 | 231.999999 | 232.999999 | 
231.000002 | 233.999996 | 236.000002 | 232.999996 | 233.000004 | 231.000002 | 229.999999 | 234.000000 | 232.999996 | 2.280000e+02 | 229.999995 | 231.999996 | 2.230000e+02 | 2.550000e+02 | 1.730000e+02 | -2.135472e-07 | 1.000000e+00 | 5.000000e+00 | 8.000000 | -5.332055e-07 | 5.139264e-07 | -0.000002 | -0.000004 | 74.000001 | 254.999999 | 231.999998 | 229.000002 | 233.000003 | 232.999999 | 232.999998 | 234.999997 | 232.999999 | 232.000003 | 232.999998 | 2.280000e+02 | 223.000004 | 2.330000e+02 | 228.000000 | 227.000001 | 229.000000 | 220.000000 | 2.550000e+02 | 1.340000e+02 | -1.681014e-07 | 6.363970e-07 | -6.975870e-07 | -5.423990e-07 | 0.000001 | 0.000002 | 68.999999 | 197.999998 | 254.999996 | 237.000001 | 229.000001 | 231.000004 | 233.000000 | 231.999996 | 233.999999 | 2.340000e+02 | 234.000004 | 230.999996 | 231.000002 | 2.280000e+02 | 2.310000e+02 | 2.230000e+02 | 227.999996 | 231.999995 | 2.380000e+02 | 2.330000e+02 | 255.000009 | 2.000000e+00 | -5.947502e-08 | -2.880678e-07 | 74.999998 | 1.330000e+02 | 196.000003 | 245.999997 | 254.999999 | 244.000001 | 227.999995 | 230.999998 | 231.999996 | 233.000002 | 237.000000 | 232.999998 | 234.000001 | 233.999996 | 232.999998 | 233.000000 | 230.000000 | 222.000002 | 227.000002 | 250.999998 | 246.000005 | 2.320000e+02 | 2.200000e+02 | 232.999997 | 1.860000e+02 | -3.012789e-07 | 6.600000e+01 | 2.280000e+02 | 2.410000e+02 | 2.420000e+02 | 2.370000e+02 | 2.350000e+02 | 224.999999 | 211.999995 | 2.300000e+02 | 232.000002 | 233.000002 | 235.000004 | 235.999999 | 232.000002 | 234.000000 | 2.340000e+02 | 235.999999 | 226.000000 | 227.000003 | 254.999995 | 254.999998 | 197.999995 | 215.000005 | 202.000000 | 186.999999 | 2.360000e+02 | 140.000000 | 4.011770e-07 | 1.750000e+02 | 2.470000e+02 | 2.140000e+02 | 224.000000 | 222.000002 | 221.000005 | 2.280000e+02 | 233.000004 | 2.310000e+02 | 2.330000e+02 | 231.000003 | 231.000000 | 229.999996 | 230.999999 | 234.999996 | 236.000004 | 227.000001 | 235.999997 | 255.000001 | 217.000005 
| 17.000001 | -2.610268e-07 | 204.999999 | 220.000001 | 204.999997 | 218.000003 | 7.500000e+01 | -1.202886e-08 | 9.600000e+01 | 2.540000e+02 | 2.380000e+02 | 2.210000e+02 | 2.290000e+02 | 222.999997 | 225.999995 | 2.320000e+02 | 2.300000e+02 | 231.000001 | 2.310000e+02 | 2.350000e+02 | 236.000004 | 237.999995 | 224.000005 | 230.000000 | 2.410000e+02 | 254.999998 | 78.000001 | 0.000004 | 6.923674e-07 | 0.000001 | 2.170000e+02 | 2.070000e+02 | 1.980000e+02 | 211.999995 | 2.700000e+01 | -2.188508e-07 | 1.379810e-07 | 9.100000e+01 | 2.550000e+02 | 2.520000e+02 | 243.999992 | 235.000002 | 2.190000e+02 | 2.200000e+02 | 224.000004 | 2.270000e+02 | 232.000003 | 235.000003 | 224.999995 | 2.230000e+02 | 234.000001 | 254.999996 | 2.000000e+02 | 0.000005 | -0.000004 | -0.000004 | 4.021377e-07 | 0.000002 | 187.000001 | 216.000000 | 2.200000e+02 | 2.150000e+02 | 5.000000e+00 | 5.295760e-08 | -1.867194e-08 | -2.868018e-07 | -7.280360e-07 | 9.100000e+01 | 199.999998 | 2.550000e+02 | 255.000003 | 2.550000e+02 | 253.999999 | 245.000000 | 233.999998 | 235.999999 | 243.999996 | 254.999996 | 255.000000 | 1.170000e+02 | 0.000003 | -0.000003 | 0.999999 | 0.000002 | -0.000002 | 0.000001 | 209.999999 | 2.060000e+02 | 1.810000e+02 | 1.470000e+02 | 5.969346e-07 | 4.756745e-08 | -9.525822e-08 | 7.000000e+00 | -3.057516e-08 | -7.851076e-07 | -8.448512e-07 | 0.000002 | 49.000000 | 1.160000e+02 | 1.810000e+02 | 208.999997 | 2.260000e+02 | 223.000000 | 205.999999 | 139.999999 | -0.000001 | 8.076585e-07 | -0.000005 | 3.000001 | 0.000005 | -1.852046e-07 | 6.976350e-08 | 0.000002 | -0.000002 | -0.000001 | 0.000002 | 5.335659e-07 | -5.266864e-07 | 8.280237e-08 | 3.033599e-08 | 5.283691e-08 | 1.380712e-07 | 1.159922e-07 | -0.000001 | -0.000002 | 0.000003 | -3.723668e-07 | -0.000002 | 7.009453e-07 | -0.000002 | 3.933536e-07 | -0.000002 | -0.000002 | -0.000001 | 0.000002 | 0.000002 | -0.000005 | -5.581073e-07 | 0.000002 | -0.000002 | -0.000001 | 2.139088e-07 | 0.000002 | -5.421757e-07 | 6.163737e-07 | 
-5.578727e-08 | 4.793912e-09 | -4.510589e-09 | -9.451520e-09 | -1.198971e-07 | -2.364927e-08 | -9.384266e-07 | -8.592667e-07 | 0.000002 | -7.577064e-07 | -0.000002 | 0.000001 | 0.000003 | 0.000002 | -0.000002 | 0.000002 | -0.000003 | 0.000001 | -0.000003 | 0.000001 | -0.000002 | -0.000002 | -0.000001 | 0.000002 | -0.000002 | -0.000002 | 4.965935e-07 | -2.344399e-07 | -1.488622e-08 | 1.608706e-08 | -3.893886e-11 | -3.305654e-09 | -5.438611e-09 | -4.320514e-08 | -2.151095e-07 | 3.743793e-07 | -6.565379e-07 | 6.560168e-08 | 2.924545e-07 | -2.304249e-08 | 9.522988e-07 | -3.426212e-07 | -6.002562e-07 | -3.102456e-07 | 8.776177e-07 | -0.000002 | 6.155732e-07 | -3.593068e-07 | 0.000002 | 3.771737e-07 | 5.207580e-07 | -1.732680e-07 | -6.984834e-07 | -2.398092e-07 | 2.374313e-07 | -4.985816e-08 | -2.682765e-10 | -2.551856e-09 | 9 |

| 1 | -1.628456e-11 | 2.116344e-10 | -1.459979e-10 | 2.426657e-09 | -2.665179e-11 | -2.594887e-09 | -2.792910e-08 | 3.400000e+01 | 4.500000e+01 | 1.650000e+02 | 1.480000e+02 | 34.000000 | -5.505288e-07 | 4.053924e-07 | 4.035032e-07 | 2.956210e-07 | 6.300000e+01 | 190.000002 | 1.390000e+02 | 2.600000e+01 | 2.800000e+01 | 9.006170e-08 | -2.751660e-08 | -2.676011e-08 | 1.534132e-09 | 3.965276e-09 | 4.171343e-09 | 5.597892e-10 | -2.459002e-10 | 1.018412e-09 | 4.727461e-09 | 2.000000e+00 | -4.694904e-09 | 8.493365e-08 | 1.070000e+02 | 1.310000e+02 | 6.400000e+01 | 151.000002 | 2.290000e+02 | 226.000002 | 219.999994 | 2.410000e+02 | 236.999998 | 243.000004 | 255.000000 | 1.950000e+02 | 92.000000 | 9.000000e+01 | 1.030000e+02 | 9.000000e+01 | 8.000000e+00 | -8.071513e-09 | 1.000000e+00 | -3.650504e-08 | 3.064484e-09 | 1.395653e-09 | -5.678459e-10 | -8.652231e-10 | 6.095701e-09 | -2.268324e-08 | 1.082306e-07 | 1.040000e+02 | 9.000000e+01 | 5.200000e+01 | 61.000000 | 76.000000 | 172.000000 | 183.999998 | 197.000003 | 191.000000 | 1.880000e+02 | 169.000002 | 160.000000 | 128.999999 | 7.000000e+01 | 88.000001 | 52.000000 | 8.500000e+01 | 7.700000e+01 | -1.672773e-07 | 2.125031e-09 | -8.742525e-08 | -1.006425e-08 | -5.139340e-09 | 2.001768e-09 | -9.364624e-10 | -1.518954e-08 | -4.168295e-08 | 1.700000e+01 | 8.900000e+01 | 5.700000e+01 | 5.700000e+01 | 7.000000e+01 | 59.000001 | 127.000000 | 184.999999 | 182.000001 | 179.000002 | 168.999998 | 163.000000 | 1.710000e+02 | 8.900000e+01 | 85.000000 | 77.000000 | 5.900000e+01 | 5.700000e+01 | 8.100000e+01 | 8.000000e+00 | -1.558985e-07 | -1.001893e-07 | -4.949160e-09 | 1.981593e-08 | 2.329049e-09 | -5.676910e-09 | -1.800060e-08 | -3.427830e-08 | 5.000000e+01 | 6.700000e+01 | 6.400000e+01 | 65.000000 | 65.000000 | 78.000000 | 69.000000 | 139.000001 | 215.000005 | 1.780000e+02 | 1.960000e+02 | 177.000000 | 81.999999 | 83.000001 | 8.200000e+01 | 65.999999 | 5.900000e+01 | 6.000000e+01 | 8.800000e+01 | 4.100000e+01 | -4.548926e-07 | 
1.847391e-08 | -1.021340e-08 | 1.077761e-08 | -2.561862e-09 | -4.695927e-08 | 4.582200e-08 | -3.727119e-08 | 7.200000e+01 | 69.000000 | 67.000001 | 64.000000 | 67.000000 | 58.999999 | 66.999999 | 5.800000e+01 | 91.000000 | 177.000000 | 116.000000 | 35.000001 | 52.999998 | 71.000001 | 58.000002 | 63.000000 | 5.700000e+01 | 72.000000 | 6.400000e+01 | 7.300000e+01 | -1.955981e-07 | 1.345243e-07 | 1.621610e-07 | 1.430707e-08 | -4.618929e-09 | 4.971934e-09 | 6.497929e-08 | 2.000000e+00 | 8.400000e+01 | 60.000001 | 69.000000 | 60.000000 | 6.100000e+01 | 56.000001 | 5.200000e+01 | 68.999999 | 55.999999 | 76.000000 | 58.000000 | 40.000001 | 56.999998 | 54.000001 | 45.000001 | 51.000001 | 46.000000 | 6.500000e+01 | 61.000000 | 9.700000e+01 | 2.000000e+00 | -5.450623e-07 | -4.032686e-07 | -1.347659e-08 | -2.890140e-08 | 1.131168e-07 | -4.175154e-08 | 7.100000e+01 | 5.400000e+01 | 56.000000 | 72.000000 | 82.000000 | 66.000000 | 48.000000 | 5.200000e+01 | 52.999997 | 7.600000e+01 | 106.000000 | 48.000000 | 60.999999 | 73.000000 | 73.000000 | 61.000000 | 78.000000 | 71.999999 | 63.999999 | 56.999999 | 82.000000 | 1.290000e+02 | -6.404821e-07 | -6.205741e-07 | -9.954905e-08 | 2.976238e-08 | -9.164984e-08 | -1.933697e-07 | 1.540000e+02 | 1.260000e+02 | 16.000000 | 7.200000e+01 | 67.000000 | 4.100000e+01 | 73.000000 | 61.000001 | 57.000001 | 63.000002 | 97.000000 | 65.000002 | 60.999998 | 71.000000 | 61.000002 | 70.000001 | 77.999999 | 5.400000e+01 | 108.000000 | 83.000000 | 144.000001 | 117.000001 | 8.170825e-07 | -8.944622e-08 | 9.377016e-08 | -2.152347e-08 | -9.805354e-08 | 1.100760e-07 | -1.284166e-07 | 1.450000e+02 | 1.470000e+02 | 1.500000e+02 | 122.000001 | 106.000000 | 106.000000 | 51.999998 | 55.999999 | 59.999999 | 81.999999 | 6.500000e+01 | 55.999997 | 69.000001 | 52.999997 | 70.000001 | 61.000002 | 94.000000 | 205.999996 | 168.000003 | 127.000002 | -1.907800e-08 | -6.678754e-07 | 3.626715e-07 | 7.653776e-08 | 3.391509e-08 | -1.837763e-07 | -1.002178e-07 | 9.562319e-08 
| 5.449854e-07 | 9.500000e+01 | 188.000003 | 190.999996 | 98.000000 | 70.000000 | 49.999998 | 52.999998 | 57.000000 | 58.000001 | 52.000002 | 52.000001 | 76.000000 | 52.999998 | 53.999996 | 64.000001 | 7.700000e+01 | 161.999997 | 85.000000 | 1.135173e-07 | -0.000001 | 0.000001 | -7.649599e-07 | 1.421396e-07 | -3.134657e-08 | -1.488990e-07 | -2.217518e-07 | -7.524475e-07 | -8.247956e-07 | 2.124748e-07 | -0.000002 | 31.999998 | 60.000001 | 63.999999 | 51.999997 | 53.000000 | 50.000003 | 61.000000 | 51.000002 | 50.999997 | 75.000002 | 69.999999 | 60.999997 | 86.000000 | 19.999996 | -0.000002 | 0.000005 | 0.000002 | 1.000002 | -2.314611e-07 | -0.000002 | 3.276677e-07 | -1.048992e-07 | -9.582211e-08 | -1.728624e-07 | 4.348013e-07 | 0.000001 | 2.000000 | 0.000001 | 40.999999 | 73.000000 | 64.000000 | 53.000000 | 47.999999 | 52.000004 | 58.000004 | 5.200000e+01 | 52.999999 | 56.999997 | 64.000002 | 53.000000 | 77.000002 | 43.999999 | -0.000005 | 3.000005 | 0.000003 | -0.000002 | -0.000002 | 0.000001 | -1.099796e-08 | -1.249524e-07 | 8.047429e-10 | 1.957992e-07 | -5.361049e-07 | 0.000001 | 0.000002 | -7.203892e-07 | 32.000000 | 69.000001 | 47.000001 | 58.000002 | 51.999996 | 52.999996 | 53.999996 | 52.000001 | 56.000004 | 56.999997 | 58.999996 | 51.000000 | 66.000002 | 3.900000e+01 | -0.000001 | 1.000001 | 0.000004 | 0.000002 | 3.101954e-07 | -0.000002 | -2.671779e-07 | 5.450883e-08 | -1.799488e-07 | -2.672337e-07 | 3.815783e-07 | 0.000002 | 1.999999e+00 | 0.000002 | 26.000003 | 59.999999 | 47.999997 | 58.000000 | 52.999997 | 49.999999 | 5.200000e+01 | 50.999996 | 56.999999 | 62.999997 | 57.000003 | 57.999998 | 73.000001 | 56.999999 | 7.423447e-07 | 9.999991e-01 | 0.000005 | 0.000002 | 3.281200e-07 | -0.000002 | -1.307099e-07 | 3.196277e-07 | 5.418402e-08 | -2.917852e-07 | 7.308653e-07 | -0.000002 | 1.999998 | -5.223541e-09 | 26.000001 | 53.000002 | 5.000000e+01 | 63.000003 | 57.000000 | 53.000003 | 60.999999 | 56.999998 | 55.999997 | 64.000004 | 56.999997 | 60.999998 | 
69.000000 | 64.999998 | 0.000005 | 1.000005e+00 | -0.000005 | 0.000002 | 3.892250e-07 | 6.972123e-07 | -2.291508e-07 | -2.135472e-07 | -4.984975e-07 | -8.258425e-07 | 0.000001 | -5.332055e-07 | 2.000003e+00 | -0.000002 | 32.000000 | 50.000000 | 66.000002 | 76.000002 | 60.999997 | 55.999997 | 58.999998 | 57.000004 | 59.999999 | 64.999999 | 51.000002 | 63.999998 | 6.100000e+01 | 59.000000 | -4.653743e-07 | -0.000005 | 0.000002 | -0.000002 | 0.000001 | -7.986391e-07 | -4.286650e-07 | -1.681014e-07 | 6.363970e-07 | -6.975870e-07 | -5.423990e-07 | 0.000001 | 0.000002 | 0.000002 | 51.000002 | 47.000002 | 69.000002 | 66.999997 | 58.999998 | 56.000002 | 59.999997 | 58.000000 | 6.100000e+01 | 63.000000 | 52.999998 | 66.000002 | 6.400000e+01 | 5.800000e+01 | -6.241514e-07 | -0.000005 | 1.000003 | 5.651978e-07 | -5.163183e-07 | 0.000002 | -1.174552e-07 | -5.947502e-08 | -2.880678e-07 | -0.000001 | 5.352320e-07 | 0.000002 | -0.000002 | -0.000002 | 59.999999 | 61.000001 | 77.000000 | 71.999997 | 64.000003 | 57.999997 | 62.999996 | 58.999998 | 65.999999 | 63.000000 | 58.000000 | 67.000003 | 72.000000 | 71.999999 | 11.000003 | -0.000005 | 9.999988e-01 | -3.174695e-07 | -0.000002 | -5.629809e-07 | -3.012789e-07 | -1.447285e-07 | -6.046403e-07 | 1.118729e-07 | -9.513843e-07 | 1.000001e+00 | 2.894738e-08 | 0.000004 | 76.000000 | 5.800000e+01 | 81.999998 | 82.999998 | 68.999996 | 63.000002 | 66.000002 | 64.000001 | 7.200000e+01 | 66.000002 | 64.000004 | 65.000000 | 76.000001 | 87.999999 | 32.000002 | -0.000002 | 2.999998 | -0.000002 | 9.265024e-07 | 0.000002 | 4.011770e-07 | 1.363091e-07 | 3.289930e-07 | -2.624320e-07 | -0.000002 | 2.000001 | -0.000003 | 1.100000e+01 | 92.000000 | 6.400000e+01 | 8.500000e+01 | 84.999998 | 78.999999 | 71.999999 | 69.000002 | 72.999999 | 85.999999 | 73.000002 | 70.999998 | 67.000000 | 83.999999 | 98.000000 | 2.800000e+01 | 0.000003 | 3.000001 | -0.000002 | -0.000002 | 7.528400e-07 | -1.202886e-08 | -1.592361e-07 | 5.095788e-07 | -4.052591e-08 | 
-2.873870e-07 | 1.999999e+00 | 0.000001 | 15.999998 | 9.800000e+01 | 8.500000e+01 | 88.000001 | 8.100000e+01 | 8.800000e+01 | 79.000001 | 72.999999 | 83.000000 | 91.000001 | 7.800000e+01 | 81.000000 | 84.000001 | 88.000000 | 1.220000e+02 | 35.000001 | -8.325330e-07 | 2.000000e+00 | -1.849482e-07 | 0.000002 | 2.427885e-08 | -2.188508e-07 | 1.379810e-07 | -1.391677e-07 | 1.906360e-07 | 4.654391e-07 | 1.000002 | -0.000001 | 3.300000e+01 | 1.350000e+02 | 96.000000 | 8.900000e+01 | 89.999999 | 96.000000 | 83.999999 | 9.000000e+01 | 97.999999 | 99.999999 | 9.000000e+01 | 90.000000 | 102.000000 | 72.999999 | 1.350000e+02 | 52.000001 | 0.000002 | 2.000001 | 7.859119e-07 | 2.466921e-07 | 1.444405e-07 | 5.295760e-08 | -1.867194e-08 | -2.868018e-07 | -7.280360e-07 | -6.257008e-07 | 2.000002 | 9.323434e-07 | 101.000000 | 1.290000e+02 | 110.000000 | 115.000000 | 97.999999 | 96.000001 | 91.000000 | 95.000001 | 101.000000 | 1.030000e+02 | 97.000000 | 92.000000 | 104.000000 | 101.000000 | 113.000001 | 59.000000 | -0.000001 | 3.000000e+00 | 7.765040e-07 | -1.484936e-08 | 5.969346e-07 | 4.756745e-08 | -9.525822e-08 | -1.043257e-07 | -3.057516e-08 | -7.851076e-07 | 2.999998e+00 | 0.000002 | 46.000000 | 1.560000e+02 | 9.400000e+01 | 117.000000 | 1.170000e+02 | 127.000000 | 112.000000 | 112.000000 | 128.000000 | 1.230000e+02 | 133.000000 | 121.000001 | 102.000000 | 9.200000e+01 | 1.500000e+02 | 51.000000 | -0.000002 | 3.000002 | 0.000002 | 5.335659e-07 | -5.266864e-07 | 8.280237e-08 | 3.033599e-08 | 5.283691e-08 | 1.380712e-07 | 1.159922e-07 | -0.000001 | -0.000002 | 0.000003 | 1.270000e+02 | 160.000000 | 1.040000e+02 | 58.999999 | 7.300000e+01 | 85.000000 | 82.000000 | 95.000000 | 92.000000 | 86.000000 | 90.000000 | 1.060000e+02 | 165.999997 | 112.999998 | -0.000001 | 2.139088e-07 | 1.000002 | -5.421757e-07 | 6.163737e-07 | -5.578727e-08 | 4.793912e-09 | -4.510589e-09 | -9.451520e-09 | -1.198971e-07 | -2.364927e-08 | -9.384266e-07 | -8.592667e-07 | 0.000002 | -7.577064e-07 | 
109.999998 | 222.000005 | 213.999997 | 181.000000 | 163.000000 | 169.000001 | 175.999999 | 169.000001 | 171.999999 | 188.000002 | 187.000002 | 82.999999 | -0.000001 | 0.000002 | -0.000002 | -0.000002 | 4.965935e-07 | -2.344399e-07 | -1.488622e-08 | 1.608706e-08 | -3.893886e-11 | -3.305654e-09 | -5.438611e-09 | -4.320514e-08 | -2.151095e-07 | 3.743793e-07 | -6.565379e-07 | 6.560168e-08 | 2.924545e-07 | -2.304249e-08 | 6.100000e+01 | 1.260000e+02 | 1.420000e+02 | 1.620000e+02 | 1.830000e+02 | 171.000000 | 1.450000e+02 | 5.400000e+01 | 0.000002 | 3.771737e-07 | 5.207580e-07 | -1.732680e-07 | -6.984834e-07 | -2.398092e-07 | 2.374313e-07 | -4.985816e-08 | -2.682765e-10 | -2.551856e-09 | 0 |

| 2 | -1.628456e-11 | 2.116344e-10 | -1.459979e-10 | 2.426657e-09 | -2.665179e-11 | -2.594887e-09 | -2.792910e-08 | -3.333367e-08 | 6.900000e+01 | 4.800000e+01 | 9.682471e-07 | 3.000002 | -5.505288e-07 | 4.053924e-07 | 4.035032e-07 | 2.956210e-07 | 4.005417e-07 | -0.000001 | 5.516976e-08 | 1.300000e+02 | 3.200000e+01 | 9.006170e-08 | -2.751660e-08 | -2.676011e-08 | 1.534132e-09 | 3.965276e-09 | 4.171343e-09 | 5.597892e-10 | -2.459002e-10 | 1.018412e-09 | 4.727461e-09 | 4.497033e-09 | -4.694904e-09 | 8.493365e-08 | -8.031146e-08 | 1.985495e-07 | 1.770000e+02 | 173.000001 | 7.044741e-07 | -0.000002 | -0.000002 | -7.585758e-07 | 0.999997 | 0.000006 | 1.999996 | -1.279166e-07 | 0.000001 | 2.040000e+02 | 7.800000e+01 | 1.444539e-07 | -1.736492e-07 | -8.071513e-09 | -1.345314e-08 | -3.650504e-08 | 3.064484e-09 | 1.395653e-09 | -5.678459e-10 | -8.652231e-10 | 6.095701e-09 | -2.268324e-08 | 1.082306e-07 | 2.055711e-07 | 4.611180e-07 | 3.908779e-07 | 127.999997 | 184.999995 | 0.000002 | 0.000001 | 0.999996 | 1.000004 | -4.594104e-07 | 0.000004 | 5.000005 | 0.000001 | 5.100000e+01 | 200.999995 | -0.000002 | 7.057845e-07 | -1.318873e-07 | -1.672773e-07 | 2.125031e-09 | -8.742525e-08 | -1.006425e-08 | -5.139340e-09 | 2.001768e-09 | -9.364624e-10 | -1.518954e-08 | -4.168295e-08 | 1.493545e-07 | 7.656082e-07 | -3.514349e-07 | -9.688781e-07 | 7.300000e+01 | 212.000004 | 17.999997 | 0.000004 | 0.999997 | -0.000003 | -0.000003 | -0.000004 | -6.836428e-07 | -3.299791e-07 | 121.999999 | 189.000002 | 5.257434e-07 | -3.949514e-07 | -1.805364e-07 | 4.216997e-07 | -1.558985e-07 | -1.001893e-07 | -4.949160e-09 | 1.981593e-08 | 2.329049e-09 | -5.676910e-09 | -1.800060e-08 | -3.427830e-08 | -5.326159e-07 | 2.273738e-07 | 6.555034e-07 | -0.000001 | 44.000000 | 219.999997 | 97.000000 | 0.000003 | 2.000004 | 9.626116e-07 | 6.564152e-07 | 1.000003 | 0.999998 | -0.000005 | 1.770000e+02 | 166.000002 | 5.942894e-07 | 9.570876e-07 | 7.753735e-07 | -8.642634e-07 | -4.548926e-07 | 1.847391e-08 | 
-1.021340e-08 | 1.077761e-08 | -2.561862e-09 | -4.695927e-08 | 4.582200e-08 | -3.727119e-08 | 1.100016e-07 | 0.000002 | -0.000002 | 0.000001 | 41.999999 | 206.000005 | 197.999999 | 6.513797e-07 | -0.000001 | 0.000005 | -0.000004 | 0.000004 | -0.000001 | 45.999999 | 209.000003 | 170.999998 | 2.016850e-07 | -0.000002 | -4.557509e-07 | 5.144796e-07 | -1.955981e-07 | 1.345243e-07 | 1.621610e-07 | 1.430707e-08 | -4.618929e-09 | 4.971934e-09 | 6.497929e-08 | 2.643760e-07 | -2.410009e-07 | -0.000002 | 0.000002 | 0.000001 | 4.900000e+01 | 224.000005 | 1.940000e+02 | 162.000001 | 20.000002 | -0.000001 | -0.000004 | -0.000005 | 47.999998 | 203.000001 | 195.999999 | 194.999998 | 0.000005 | -7.583148e-07 | -0.000002 | 8.919840e-07 | -6.656718e-07 | -5.450623e-07 | -4.032686e-07 | -1.347659e-08 | -2.890140e-08 | 1.131168e-07 | -4.175154e-08 | -5.971974e-07 | 3.715096e-07 | 0.000001 | 0.000001 | -0.000001 | 57.000000 | 221.999997 | 1.800000e+02 | 202.999997 | 2.150000e+02 | 194.999999 | 194.999999 | 207.000000 | 213.000002 | 190.000000 | 183.000001 | 222.999998 | 1.999996 | -0.000002 | 0.000002 | 0.000001 | -7.762443e-07 | -6.404821e-07 | -6.205741e-07 | -9.954905e-08 | 2.976238e-08 | -9.164984e-08 | -1.933697e-07 | -3.981918e-07 | 4.334051e-07 | -0.000002 | 7.406876e-07 | -0.000003 | 1.130000e+02 | 223.000002 | 166.000000 | 165.000001 | 173.999998 | 185.000001 | 186.999998 | 181.000001 | 176.000001 | 168.999999 | 182.000001 | 222.000000 | 8.100000e+01 | 0.000001 | 0.000002 | 0.000001 | 0.000002 | 8.170825e-07 | -8.944622e-08 | 9.377016e-08 | -2.152347e-08 | -9.805354e-08 | 1.100760e-07 | -1.284166e-07 | -8.785177e-07 | -5.893633e-07 | 9.804652e-07 | 0.000001 | 165.999999 | 243.000000 | 233.000002 | 223.999995 | 183.000001 | 186.000002 | 1.800000e+02 | 191.999998 | 187.000002 | 191.000000 | 189.000001 | 232.000000 | 119.000000 | -0.000006 | 0.000005 | 0.000002 | -1.907800e-08 | -6.678754e-07 | 3.626715e-07 | 7.653776e-08 | 3.391509e-08 | -1.837763e-07 | -1.002178e-07 | 
9.562319e-08 | 5.449854e-07 | 6.690875e-07 | 0.000002 | 0.000003 | 129.000000 | 230.000005 | 231.000002 | 233.999995 | 222.000001 | 215.000002 | 223.999995 | 215.000001 | 216.000002 | 225.000002 | 221.000001 | 255.000004 | 4.500000e+01 | -0.000003 | -0.000002 | 1.135173e-07 | -0.000001 | 0.000001 | -7.649599e-07 | 1.421396e-07 | -3.134657e-08 | -1.488990e-07 | -2.217518e-07 | -7.524475e-07 | -8.247956e-07 | 2.124748e-07 | -0.000002 | 0.000002 | 108.000000 | 221.000000 | 213.000004 | 223.000003 | 215.000002 | 220.999997 | 230.999996 | 216.999998 | 230.000001 | 188.000000 | 222.000000 | 239.000005 | 15.000004 | -0.000002 | 0.000005 | 0.000002 | -0.000001 | -2.314611e-07 | -0.000002 | 3.276677e-07 | -1.048992e-07 | -9.582211e-08 | -1.728624e-07 | 4.348013e-07 | 0.000001 | 0.000002 | 0.000001 | 0.000003 | 71.000000 | 250.999996 | 229.000000 | 189.999998 | 192.000001 | 200.999998 | 1.990000e+02 | 195.000001 | 199.999999 | 200.000002 | 214.000000 | 216.000002 | -0.000003 | -0.000005 | -0.000002 | 0.000003 | -0.000002 | -0.000002 | 0.000001 | -1.099796e-08 | -1.249524e-07 | 8.047429e-10 | 1.957992e-07 | -5.361049e-07 | 0.000001 | 0.000002 | -7.203892e-07 | 0.000001 | 36.000001 | 235.999998 | 191.000001 | 186.999999 | 182.999999 | 176.000000 | 174.000000 | 174.000001 | 176.999999 | 179.999999 | 215.999998 | 194.000001 | 5.151848e-07 | -0.000001 | 0.000001 | 0.000004 | 0.000002 | 3.101954e-07 | -0.000002 | -2.671779e-07 | 5.450883e-08 | -1.799488e-07 | -2.672337e-07 | 3.815783e-07 | 0.000002 | -8.765240e-07 | 0.000002 | 0.000005 | 10.999995 | 231.999995 | 191.999999 | 189.000001 | 185.999999 | 1.880000e+02 | 185.999999 | 183.000000 | 186.000000 | 182.999999 | 213.000002 | 191.999998 | 0.000001 | 7.423447e-07 | 1.327465e-07 | 0.000005 | 0.000002 | 3.281200e-07 | -0.000002 | -1.307099e-07 | 3.196277e-07 | 5.418402e-08 | -2.917852e-07 | 7.308653e-07 | -0.000002 | 0.000002 | -5.223541e-09 | 0.000004 | 1.999999 | 2.340000e+02 | 195.999998 | 187.000000 | 185.000001 | 180.999999 | 
180.999998 | 181.000001 | 182.000001 | 183.000001 | 206.000000 | 191.000001 | 0.000002 | 0.000005 | 4.826824e-07 | -0.000005 | 0.000002 | 3.892250e-07 | 6.972123e-07 | -2.291508e-07 | -2.135472e-07 | -4.984975e-07 | -8.258425e-07 | 0.000001 | -5.332055e-07 | 5.139264e-07 | -0.000002 | -0.000004 | 40.999999 | 225.999998 | 183.000001 | 189.000000 | 184.999999 | 181.000000 | 182.000001 | 181.000001 | 181.000001 | 185.000000 | 188.999999 | 2.230000e+02 | 11.000000 | -4.653743e-07 | -0.000005 | 0.000002 | -0.000002 | 0.000001 | -7.986391e-07 | -4.286650e-07 | -1.681014e-07 | 6.363970e-07 | -6.975870e-07 | -5.423990e-07 | 0.000001 | 0.000002 | 0.000002 | 0.000005 | 186.000001 | 207.999998 | 181.000001 | 186.000000 | 181.999999 | 181.000000 | 181.000001 | 1.800000e+02 | 182.000000 | 179.000000 | 180.000000 | 2.120000e+02 | 1.350000e+02 | -6.241514e-07 | -0.000005 | 0.000002 | 5.651978e-07 | -5.163183e-07 | 0.000002 | -1.174552e-07 | -5.947502e-08 | -2.880678e-07 | -0.000001 | 5.352320e-07 | 0.000002 | -0.000002 | -0.000002 | -0.000005 | 144.000000 | 220.999998 | 182.000001 | 183.000000 | 180.000001 | 181.999999 | 178.999999 | 179.999999 | 176.999999 | 188.000001 | 222.999998 | 151.999999 | 0.999998 | 0.000004 | -0.000005 | -2.677840e-07 | -3.174695e-07 | -0.000002 | -5.629809e-07 | -3.012789e-07 | -1.447285e-07 | -6.046403e-07 | 1.118729e-07 | -9.513843e-07 | -2.911069e-07 | 2.894738e-08 | 0.000004 | 0.000005 | -3.351748e-07 | 93.000001 | 225.999998 | 185.000000 | 181.000001 | 180.999999 | 180.000001 | 1.770000e+02 | 191.000000 | 213.000000 | 67.000001 | 0.000005 | 0.000003 | 0.000005 | -0.000002 | -0.000001 | -0.000002 | 9.265024e-07 | 0.000002 | 4.011770e-07 | 1.363091e-07 | 3.289930e-07 | -2.624320e-07 | -0.000002 | -0.000002 | -0.000003 | 3.360567e-07 | 0.000004 | 9.999977e-01 | 2.258955e-07 | 84.000001 | 226.000001 | 180.000000 | 182.000001 | 181.999998 | 177.999999 | 226.000001 | 43.999998 | -0.000004 | 1.000000 | -0.000001 | -2.610268e-07 | 0.000003 | -0.000002 | 
-0.000002 | -0.000002 | 7.528400e-07 | -1.202886e-08 | -1.592361e-07 | 5.095788e-07 | -4.052591e-08 | -2.873870e-07 | 5.500636e-07 | 0.000001 | -0.000002 | 6.275720e-07 | 4.274649e-07 | 0.000001 | 5.958969e-07 | 1.540000e+02 | 213.000001 | 178.000001 | 172.999998 | 216.000001 | 1.180000e+02 | 0.000001 | 0.000001 | 0.999996 | 6.923674e-07 | 0.000001 | -8.325330e-07 | -5.767398e-07 | -1.849482e-07 | 0.000002 | 2.427885e-08 | -2.188508e-07 | 1.379810e-07 | -1.391677e-07 | 1.906360e-07 | 4.654391e-07 | 0.000001 | -0.000001 | -4.551377e-07 | 7.378563e-07 | -0.000002 | 1.000002e+00 | 0.000003 | 29.000002 | 221.999998 | 1.800000e+02 | 182.000000 | 223.000000 | 1.000002e+00 | 0.000005 | -0.000004 | -0.000004 | 1.000000e+00 | 0.000002 | 0.000002 | 0.000001 | 7.859119e-07 | 2.466921e-07 | 1.444405e-07 | 5.295760e-08 | -1.867194e-08 | -2.868018e-07 | -7.280360e-07 | -6.257008e-07 | 0.000001 | 9.323434e-07 | 0.000002 | 8.744090e-07 | 0.000002 | 1.000005 | 0.000003 | 0.000005 | 197.999999 | 187.000003 | 194.000002 | 1.530000e+02 | 0.000003 | -0.000003 | 0.000005 | 0.000002 | 1.000002 | 0.000001 | -0.000001 | 3.297868e-07 | 7.765040e-07 | -1.484936e-08 | 5.969346e-07 | 4.756745e-08 | -9.525822e-08 | -1.043257e-07 | -3.057516e-08 | -7.851076e-07 | -8.448512e-07 | 0.000002 | -0.000001 | 8.809801e-07 | 1.723858e-07 | 0.000001 | -7.570235e-07 | 0.000001 | 135.000000 | 196.999999 | 199.000002 | 8.800000e+01 | -0.000005 | 1.000000 | 0.000005 | -1.852046e-07 | 1.000000e+00 | 0.000002 | -0.000002 | -0.000001 | 0.000002 | 5.335659e-07 | -5.266864e-07 | 8.280237e-08 | 3.033599e-08 | 5.283691e-08 | 1.380712e-07 | 1.159922e-07 | -0.000001 | -0.000002 | 0.000003 | -3.723668e-07 | -0.000002 | 7.009453e-07 | 0.999999 | 3.933536e-07 | 58.999999 | 199.000005 | 197.000002 | 51.000001 | 0.000002 | 0.999998 | -5.581073e-07 | 0.000002 | -0.000002 | -0.000001 | 2.139088e-07 | 0.000002 | -5.421757e-07 | 6.163737e-07 | -5.578727e-08 | 4.793912e-09 | -4.510589e-09 | -9.451520e-09 | -1.198971e-07 | 
-2.364927e-08 | -9.384266e-07 | -8.592667e-07 | 0.000002 | -7.577064e-07 | -0.000002 | 0.000001 | 2.000002 | 0.000002 | -0.000002 | 201.000001 | 223.999998 | 26.999997 | -0.000003 | 2.999998 | -0.000002 | -0.000002 | -0.000001 | 0.000002 | -0.000002 | -0.000002 | 4.965935e-07 | -2.344399e-07 | -1.488622e-08 | 1.608706e-08 | -3.893886e-11 | -3.305654e-09 | -5.438611e-09 | -4.320514e-08 | -2.151095e-07 | 3.743793e-07 | -6.565379e-07 | 6.560168e-08 | 2.924545e-07 | -2.304249e-08 | 1.000000e+00 | -3.426212e-07 | -6.002562e-07 | 9.900000e+01 | 1.280000e+02 | -0.000002 | 6.155732e-07 | 1.000001e+00 | 0.000002 | 3.771737e-07 | 5.207580e-07 | -1.732680e-07 | -6.984834e-07 | -2.398092e-07 | 2.374313e-07 | -4.985816e-08 | -2.682765e-10 | -2.551856e-09 | 0 |

| 3 | -1.628456e-11 | 2.116344e-10 | -1.459979e-10 | 2.426657e-09 | -2.665179e-11 | -2.594887e-09 | -2.792910e-08 | -3.333367e-08 | 8.642878e-08 | -4.371375e-07 | 9.682471e-07 | -0.000002 | -5.505288e-07 | 4.053924e-07 | 4.035032e-07 | 2.956210e-07 | 4.005417e-07 | -0.000001 | 5.516976e-08 | -2.068072e-07 | -9.467687e-08 | 9.006170e-08 | -2.751660e-08 | -2.676011e-08 | 1.534132e-09 | 3.965276e-09 | 4.171343e-09 | 5.597892e-10 | -2.459002e-10 | 1.018412e-09 | 4.727461e-09 | 4.497033e-09 | -4.694904e-09 | 8.493365e-08 | -8.031146e-08 | 1.985495e-07 | -1.276844e-07 | -0.000001 | 7.044741e-07 | -0.000002 | -0.000002 | -7.585758e-07 | -0.000006 | 0.000006 | 0.000002 | -1.279166e-07 | 0.000001 | -9.767781e-07 | -6.219337e-07 | 1.444539e-07 | -1.736492e-07 | -8.071513e-09 | -1.345314e-08 | -3.650504e-08 | 3.064484e-09 | 1.395653e-09 | -5.678459e-10 | -8.652231e-10 | 6.095701e-09 | -2.268324e-08 | 1.082306e-07 | 2.055711e-07 | 4.611180e-07 | 3.908779e-07 | 0.000002 | -0.000002 | 0.000002 | 1.000000 | -0.000003 | 0.000003 | 1.290000e+02 | 197.000002 | 181.000000 | 207.000005 | 2.370000e+02 | 219.000005 | 180.999997 | 1.610000e+02 | 1.110000e+02 | 9.700000e+01 | 7.500000e+01 | 4.400000e+01 | -1.006425e-08 | -5.139340e-09 | 2.001768e-09 | -9.364624e-10 | -1.518954e-08 | -4.168295e-08 | 1.493545e-07 | 7.656082e-07 | -3.514349e-07 | -9.688781e-07 | 8.386749e-07 | 0.000002 | 0.000005 | 2.000005 | -0.000003 | -0.000003 | 203.999995 | 214.000003 | 1.880000e+02 | 2.080000e+02 | 247.000002 | 217.000003 | 2.300000e+02 | 2.220000e+02 | 2.220000e+02 | 2.400000e+02 | 2.170000e+02 | 2.520000e+02 | 9.000000e+00 | 1.981593e-08 | 2.329049e-09 | -5.676910e-09 | -1.800060e-08 | -3.427830e-08 | -5.326159e-07 | 2.273738e-07 | 6.555034e-07 | -0.000001 | -0.000002 | 0.000002 | 0.000005 | 2.999999 | 0.000002 | 9.626116e-07 | 2.170000e+02 | 206.000004 | 209.000005 | 213.000000 | 2.010000e+02 | 174.000002 | 2.000000e+02 | 1.990000e+02 | 1.940000e+02 | 2.030000e+02 | 2.010000e+02 | 1.860000e+02 | 
-1.021340e-08 | 1.077761e-08 | -2.561862e-09 | -4.695927e-08 | 4.582200e-08 | -3.727119e-08 | 1.100016e-07 | 0.000002 | -0.000002 | 0.000001 | -0.000002 | -0.000005 | -0.000001 | 3.999999e+00 | -0.000001 | 3.999997 | 193.000001 | 200.000002 | 201.000002 | 197.000000 | 199.000002 | 185.000003 | 2.300000e+02 | 189.999995 | 1.960000e+02 | 1.980000e+02 | 2.100000e+02 | 2.060000e+02 | 1.880000e+02 | 2.200000e+01 | -4.618929e-09 | 4.971934e-09 | 6.497929e-08 | 2.643760e-07 | -2.410009e-07 | -0.000002 | 0.000002 | 0.000001 | -8.460442e-08 | 0.000003 | -9.104431e-07 | 4.999997 | 0.000005 | 13.000001 | 194.999999 | 198.999998 | 202.000002 | 219.000000 | 229.999995 | 197.000001 | 198.000001 | 1.650000e+02 | 218.000001 | 2.160000e+02 | 2.120000e+02 | 2.210000e+02 | 2.250000e+02 | 8.100000e+01 | -2.890140e-08 | 1.131168e-07 | -4.175154e-08 | -5.971974e-07 | 3.715096e-07 | 0.000001 | 0.000001 | -0.000001 | 0.000001 | -0.000004 | 1.262333e-07 | 4.999995 | -4.162347e-07 | 14.999996 | 237.000002 | 200.000002 | 196.000000 | 190.000000 | 192.999998 | 253.000005 | 223.999996 | 223.000005 | 226.000000 | 216.999997 | 2.100000e+02 | 1.840000e+02 | 1.900000e+01 | -9.954905e-08 | 2.976238e-08 | -9.164984e-08 | -1.933697e-07 | -3.981918e-07 | 4.334051e-07 | -0.000002 | 7.406876e-07 | -0.000003 | -9.455209e-07 | 0.000004 | 0.000004 | 5.000001 | -0.000002 | 14.000000 | 223.999995 | 201.000001 | 205.999998 | 215.999998 | 186.000002 | 188.000000 | 2.120000e+02 | 192.999997 | 194.000003 | 191.999997 | 219.999991 | 1.210000e+02 | -8.944622e-08 | 9.377016e-08 | -2.152347e-08 | -9.805354e-08 | 1.100760e-07 | -1.284166e-07 | -8.785177e-07 | -5.893633e-07 | 9.804652e-07 | 0.000001 | -0.000003 | -0.000005 | -0.000004 | 2.999995 | -0.000002 | 14.000003 | 2.260000e+02 | 199.000000 | 209.000001 | 214.999999 | 213.000000 | 199.000001 | 200.000000 | 202.999995 | 203.000000 | 189.999996 | 2.170000e+02 | 1.420000e+02 | 3.626715e-07 | 7.653776e-08 | 3.391509e-08 | -1.837763e-07 | -1.002178e-07 | 9.562319e-08 
| 5.449854e-07 | 6.690875e-07 | 0.000002 | 0.000003 | -0.000002 | 0.000003 | -0.000003 | 1.000002 | -0.000004 | 4.999998 | 211.000002 | 210.999999 | 211.999998 | 197.000001 | 204.000001 | 203.000002 | 2.000000e+02 | 201.999999 | 200.000002 | 1.920000e+02 | 202.000001 | 206.000000 | -7.649599e-07 | 1.421396e-07 | -3.134657e-08 | -1.488990e-07 | -2.217518e-07 | -7.524475e-07 | -8.247956e-07 | 2.124748e-07 | -0.000002 | 0.000002 | -0.000002 | 0.000004 | 0.000005 | -0.000002 | -0.000002 | 144.000000 | 210.000002 | 189.000001 | 233.000002 | 252.999998 | 228.999998 | 222.999998 | 214.999998 | 212.000005 | 204.999999 | 210.000003 | 196.000004 | 2.090000e+02 | 62.000001 | 3.276677e-07 | -1.048992e-07 | -9.582211e-08 | -1.728624e-07 | 4.348013e-07 | 0.000001 | 0.000002 | 0.000001 | 0.000003 | 0.000002 | 0.000002 | 1.999995 | 0.000001 | 0.000002 | 222.999996 | 1.890000e+02 | 196.000002 | 164.000000 | 146.000000 | 221.000000 | 180.000002 | 199.999999 | 207.999997 | 207.000001 | 201.999999 | 196.000002 | 205.000000 | 163.000001 | -1.099796e-08 | -1.249524e-07 | 8.047429e-10 | 1.957992e-07 | -5.361049e-07 | 0.000001 | 0.000002 | -7.203892e-07 | 0.000001 | 0.000003 | 0.000001 | 4.000004 | 0.000002 | 49.999998 | 233.000001 | 178.999998 | 218.000001 | 190.999999 | 155.000000 | 205.000002 | 217.000000 | 2.270000e+02 | 185.000000 | 201.000000 | 203.000004 | 200.000001 | 2.000000e+02 | 200.000002 | -2.671779e-07 | 5.450883e-08 | -1.799488e-07 | -2.672337e-07 | 3.815783e-07 | 0.000002 | -8.765240e-07 | 0.000002 | 0.000005 | 0.999998 | 0.000003 | -0.000001 | -0.000003 | 154.000000 | 2.110000e+02 | 199.000000 | 216.999998 | 190.000001 | 228.000001 | 208.000000 | 201.999998 | 231.000001 | 2.050000e+02 | 1.880000e+02 | 193.999997 | 200.000003 | 2.000000e+02 | 212.000002 | 9.000000e+00 | 3.196277e-07 | 5.418402e-08 | -2.917852e-07 | 9.999992e-01 | 1.999999 | 1.999998 | 2.999998e+00 | 0.000004 | 0.000005 | -1.976124e-07 | 44.999999 | 161.000000 | 177.000000 | 145.000000 | 220.000000 | 
222.999999 | 141.000000 | 223.999999 | 218.999999 | 223.999996 | 215.000002 | 205.000002 | 2.250000e+02 | 206.999995 | 207.999997 | 1.970000e+02 | 2.240000e+02 | 3.900000e+01 | -2.135472e-07 | 2.000001e+00 | 2.999999e+00 | 0.000001 | -5.332055e-07 | 5.139264e-07 | -0.000002 | -0.000004 | -0.000002 | 101.000001 | 174.000000 | 165.000000 | 171.000001 | 174.999999 | 195.999998 | 232.000004 | 237.000001 | 222.999999 | 174.999999 | 1.800000e+02 | 171.000001 | 1.960000e+02 | 192.000003 | 186.999997 | 189.999995 | 191.000004 | 2.160000e+02 | 1.700000e+01 | -1.681014e-07 | 6.363970e-07 | -6.975870e-07 | -5.423990e-07 | 6.000001 | 2.000002 | 29.000001 | 84.000000 | 150.999999 | 147.000000 | 113.999999 | 164.000000 | 196.000001 | 211.999998 | 207.000000 | 2.040000e+02 | 208.000000 | 204.999999 | 190.000001 | 1.930000e+02 | 1.960000e+02 | 1.850000e+02 | 180.000000 | 182.000000 | 1.840000e+02 | 1.840000e+02 | 216.000002 | 1.400000e+01 | -5.947502e-08 | -2.880678e-07 | 101.000000 | 1.340000e+02 | 144.000002 | 163.999999 | 161.999999 | 144.000000 | 109.000000 | 113.000000 | 169.000000 | 193.999998 | 204.000002 | 209.999999 | 212.000002 | 203.000001 | 199.000001 | 188.999999 | 176.999999 | 178.999998 | 188.000002 | 204.000003 | 205.000005 | 2.010000e+02 | 1.990000e+02 | 190.000001 | 2.190000e+02 | 1.600000e+01 | -1.447285e-07 | 9.400000e+01 | 1.930000e+02 | 1.260000e+02 | 6.400000e+01 | 3.200000e+01 | 11.000002 | 90.000000 | 1.520000e+02 | 170.999999 | 191.000000 | 199.000000 | 204.000002 | 209.000002 | 207.999998 | 1.900000e+02 | 186.999999 | 180.000001 | 214.000002 | 233.000001 | 204.000005 | 182.000002 | 176.999998 | 175.000000 | 178.000005 | 1.760000e+02 | 215.000007 | 1.700000e+01 | 2.200000e+01 | 1.870000e+02 | 2.160000e+02 | 215.999999 | 201.999998 | 199.999997 | 1.890000e+02 | 195.000001 | 1.960000e+02 | 1.960000e+02 | 195.999998 | 199.999999 | 204.000001 | 199.999998 | 204.999999 | 196.000001 | 215.999998 | 255.000000 | 143.000000 | 90.000000 | 190.000005 | 1.630000e+02 
| 171.000000 | 175.999995 | 179.999997 | 180.000004 | 2.110000e+02 | 1.900000e+01 | 1.090000e+02 | 1.960000e+02 | 1.720000e+02 | 1.960000e+02 | 2.080000e+02 | 214.999998 | 222.999998 | 2.100000e+02 | 2.050000e+02 | 202.999998 | 2.010000e+02 | 2.060000e+02 | 210.999999 | 214.000003 | 200.000002 | 219.000001 | 1.870000e+02 | 31.000004 | 0.000001 | 27.000001 | 2.280000e+02 | 165.000001 | 1.790000e+02 | 1.810000e+02 | 1.800000e+02 | 179.000000 | 2.060000e+02 | 2.900000e+01 | 4.600000e+01 | 1.900000e+02 | 2.140000e+02 | 1.950000e+02 | 178.999996 | 187.999999 | 1.930000e+02 | 2.020000e+02 | 205.000001 | 2.120000e+02 | 210.000000 | 209.000001 | 199.999999 | 1.830000e+02 | 197.999999 | 109.000000 | 9.842208e-07 | 0.000005 | -0.000004 | 44.000002 | 1.880000e+02 | 168.000004 | 176.999996 | 164.999996 | 1.750000e+02 | 1.760000e+02 | 1.760000e+02 | 2.500000e+01 | -1.867194e-08 | -2.868018e-07 | 9.100000e+01 | 1.930000e+02 | 210.999997 | 2.050000e+02 | 202.000003 | 2.010000e+02 | 199.000001 | 188.000001 | 189.999997 | 191.000001 | 183.000000 | 211.000004 | 152.000000 | 8.867231e-07 | 0.000003 | 4.999995 | 0.000005 | 12.999999 | 198.999999 | 190.000003 | 192.999998 | 1.970000e+02 | 1.990000e+02 | 2.030000e+02 | 2.120000e+02 | 5.900000e+01 | -9.525822e-08 | -1.043257e-07 | -3.057516e-08 | -7.851076e-07 | 2.200000e+01 | 62.000000 | 152.000000 | 2.050000e+02 | 1.860000e+02 | 232.000002 | 2.290000e+02 | 182.999998 | 214.999995 | 164.000003 | 7.999998 | 8.076585e-07 | 2.000003 | -0.000006 | 0.000005 | 9.999992e-01 | 1.840000e+02 | 137.000002 | 155.000001 | 165.000005 | 136.000003 | 1.290000e+02 | 1.130000e+02 | 1.800000e+01 | 3.033599e-08 | 5.283691e-08 | 1.000000e+00 | 1.159922e-07 | -0.000001 | -0.000002 | 0.000003 | -3.723668e-07 | -0.000002 | 7.009453e-07 | 20.000000 | 3.933536e-07 | -0.000002 | -0.000002 | -0.000001 | 0.000002 | 0.000002 | -0.000005 | -5.581073e-07 | 0.000002 | -0.000002 | -0.000001 | 2.139088e-07 | 0.000002 | -5.421757e-07 | 6.163737e-07 | -5.578727e-08 | 
4.793912e-09 | -4.510589e-09 | -9.451520e-09 | -1.198971e-07 | -2.364927e-08 | -9.384266e-07 | -8.592667e-07 | 0.000002 | -7.577064e-07 | -0.000002 | 0.000001 | 0.000003 | 0.000002 | -0.000002 | 0.000002 | -0.000003 | 0.000001 | -0.000003 | 0.000001 | -0.000002 | -0.000002 | -0.000001 | 0.000002 | -0.000002 | -0.000002 | 4.965935e-07 | -2.344399e-07 | -1.488622e-08 | 1.608706e-08 | -3.893886e-11 | -3.305654e-09 | -5.438611e-09 | -4.320514e-08 | -2.151095e-07 | 3.743793e-07 | -6.565379e-07 | 6.560168e-08 | 2.924545e-07 | -2.304249e-08 | 9.522988e-07 | -3.426212e-07 | -6.002562e-07 | -3.102456e-07 | 8.776177e-07 | -0.000002 | 6.155732e-07 | -3.593068e-07 | 0.000002 | 3.771737e-07 | 5.207580e-07 | -1.732680e-07 | -6.984834e-07 | -2.398092e-07 | 2.374313e-07 | -4.985816e-08 | -2.682765e-10 | -2.551856e-09 | 9 |

| 4 | -1.628456e-11 | 2.116344e-10 | -1.459979e-10 | 2.426657e-09 | -2.665179e-11 | -2.594887e-09 | -2.792910e-08 | -3.333367e-08 | 8.642878e-08 | -4.371375e-07 | 1.930000e+02 | 222.000000 | 2.050000e+02 | 1.790000e+02 | 1.970000e+02 | 1.700000e+02 | 1.770000e+02 | 201.000007 | 1.480000e+02 | -2.068072e-07 | -9.467687e-08 | 9.006170e-08 | -2.751660e-08 | -2.676011e-08 | 1.534132e-09 | 3.965276e-09 | 4.171343e-09 | 5.597892e-10 | -2.459002e-10 | 1.018412e-09 | 4.727461e-09 | 4.497033e-09 | -4.694904e-09 | 8.493365e-08 | -8.031146e-08 | 1.985495e-07 | -1.276844e-07 | -0.000001 | 2.550000e+02 | 236.999996 | 240.999995 | 2.390000e+02 | 219.000004 | 206.000000 | 207.000000 | 2.100000e+02 | 221.000003 | -9.767781e-07 | -6.219337e-07 | 1.444539e-07 | -1.736492e-07 | -8.071513e-09 | -1.345314e-08 | -3.650504e-08 | 3.064484e-09 | 1.395653e-09 | -5.678459e-10 | -8.652231e-10 | 6.095701e-09 | -2.268324e-08 | 1.082306e-07 | 2.055711e-07 | 4.611180e-07 | 3.908779e-07 | 0.000002 | 24.000000 | 255.000005 | 227.000002 | 227.000003 | 232.000001 | 2.140000e+02 | 202.999998 | 204.999995 | 181.000003 | 2.250000e+02 | 0.000002 | -0.000002 | 7.057845e-07 | -1.318873e-07 | -1.672773e-07 | 2.125031e-09 | -8.742525e-08 | -1.006425e-08 | -5.139340e-09 | 2.001768e-09 | -9.364624e-10 | -1.518954e-08 | -4.168295e-08 | 1.493545e-07 | 7.656082e-07 | -3.514349e-07 | -9.688781e-07 | 8.386749e-07 | 93.000000 | 254.999997 | 214.000001 | 212.999998 | 211.000002 | 219.999995 | 214.000003 | 2.320000e+02 | 2.150000e+02 | 235.999998 | 66.000000 | 5.257434e-07 | -3.949514e-07 | -1.805364e-07 | 4.216997e-07 | -1.558985e-07 | -1.001893e-07 | -4.949160e-09 | 1.981593e-08 | 2.329049e-09 | -5.676910e-09 | -1.800060e-08 | -3.427830e-08 | -5.326159e-07 | 2.273738e-07 | 6.555034e-07 | -0.000001 | -0.000002 | 153.000000 | 254.999998 | 205.000000 | 195.000002 | 1.950000e+02 | 2.180000e+02 | 227.000002 | 233.999997 | 197.999999 | 1.900000e+02 | 162.000002 | 5.942894e-07 | 9.570876e-07 | 7.753735e-07 | -8.642634e-07 | -4.548926e-07 | 1.847391e-08 | -1.021340e-08 | 1.077761e-08 | -2.561862e-09 | -4.695927e-08 | 4.582200e-08 | -3.727119e-08 | 1.100016e-07 | 0.000002 | -0.000002 | 0.000001 | -0.000002 | 178.000001 | 253.000005 | 1.980000e+02 | 161.999999 | 215.000002 | 241.000000 | 228.000000 | 237.999996 | 207.000001 | 166.000002 | 194.000003 | 2.016850e-07 | -0.000002 | -4.557509e-07 | 5.144796e-07 | -1.955981e-07 | 1.345243e-07 | 1.621610e-07 | 1.430707e-08 | -4.618929e-09 | 4.971934e-09 | 6.497929e-08 | 2.643760e-07 | -2.410009e-07 | -0.000002 | 0.000002 | 0.000001 | -8.460442e-08 | 188.999996 | 2.510000e+02 | 196.999998 | 170.000000 | 232.000002 | 205.000002 | 251.999998 | 238.999999 | 211.999997 | 166.999999 | 206.000000 | 0.000005 | -7.583148e-07 | -0.000002 | 8.919840e-07 | -6.656718e-07 | -5.450623e-07 | -4.032686e-07 | -1.347659e-08 | -2.890140e-08 | 1.131168e-07 | -4.175154e-08 | -5.971974e-07 | 3.715096e-07 | 0.000001 | 0.000001 | -0.000001 | 0.000001 | 188.000003 | 2.490000e+02 | 199.000002 | 1.860000e+02 | 255.000003 | 79.000000 | 254.999997 | 240.000002 | 214.999997 | 177.000001 | 201.000002 | 0.000002 | -0.000002 | 0.000002 | 0.000001 | -7.762443e-07 | -6.404821e-07 | -6.205741e-07 | -9.954905e-08 | 2.976238e-08 | -9.164984e-08 | -1.933697e-07 | -3.981918e-07 | 4.334051e-07 | -0.000002 | 7.406876e-07 | -0.000003 | -9.455209e-07 | 184.999998 | 246.999997 | 204.000001 | 197.999999 | 255.000000 | 27.000001 | 246.999998 | 245.999998 | 223.000005 | 192.999999 | 188.999998 | 3.125923e-07 | 0.000001 | 0.000002 | 0.000001 | 0.000002 | 8.170825e-07 | -8.944622e-08 | 9.377016e-08 | -2.152347e-08 | -9.805354e-08 | 1.100760e-07 | -1.284166e-07 | -8.785177e-07 | -5.893633e-07 | 9.804652e-07 | 0.000001 | -0.000003 | 155.000002 | 253.999999 | 206.000004 | 209.000003 | 255.000000 | -6.622733e-07 | 230.999997 | 255.000000 | 221.000004 | 213.000000 | 171.999998 | -0.000002 | -0.000006 | 0.000005 | 0.000002 | -1.907800e-08 | -6.678754e-07 | 3.626715e-07 | 7.653776e-08 | 3.391509e-08 | -1.837763e-07 | -1.002178e-07 | 9.562319e-08 | 5.449854e-07 | 6.690875e-07 | 0.000002 | 0.000003 | -0.000002 | 99.000000 | 254.999997 | 205.999997 | 218.999996 | 252.000002 | -0.000003 | 179.000000 | 255.000001 | 219.000002 | 227.000003 | 148.000000 | 8.404825e-07 | -0.000003 | -0.000002 | 1.135173e-07 | -0.000001 | 0.000001 | -7.649599e-07 | 1.421396e-07 | -3.134657e-08 | -1.488990e-07 | -2.217518e-07 | -7.524475e-07 | -8.247956e-07 | 2.124748e-07 | -0.000002 | 0.000002 | -0.000002 | 43.000000 | 254.999995 | 209.000002 | 217.999997 | 227.999996 | 0.000004 | 96.000000 | 254.999999 | 220.000001 | 243.000002 | 127.000000 | -0.000005 | -0.000002 | 0.000005 | 0.000002 | -0.000001 | -2.314611e-07 | -0.000002 | 3.276677e-07 | -1.048992e-07 | -9.582211e-08 | -1.728624e-07 | 4.348013e-07 | 0.000001 | 0.000002 | 0.000001 | 0.000003 | 0.000002 | 0.000002 | 251.000003 | 217.000004 | 221.000001 | 187.999999 | -5.688553e-07 | 47.000001 | 255.000002 | 220.000001 | 251.999996 | 103.999999 | -0.000003 | -0.000005 | -0.000002 | 0.000003 | -0.000002 | -0.000002 | 0.000001 | -1.099796e-08 | -1.249524e-07 | 8.047429e-10 | 1.957992e-07 | -5.361049e-07 | 0.000001 | 0.000002 | -7.203892e-07 | 0.000001 | 0.000003 | 0.000001 | 218.000002 | 222.999999 | 225.000003 | 150.000000 | 0.000002 | 26.999997 | 255.000003 | 216.000001 | 253.999997 | 76.000002 | 5.151848e-07 | -0.000001 | 0.000001 | 0.000004 | 0.000002 | 3.101954e-07 | -0.000002 | -2.671779e-07 | 5.450883e-08 | -1.799488e-07 | -2.672337e-07 | 3.815783e-07 | 0.000002 | -8.765240e-07 | 0.000002 | 0.000005 | -0.000001 | 0.000003 | 169.000001 | 229.000002 | 230.000000 | 1.200000e+02 | -0.000004 | 5.999993 | 255.000003 | 218.000002 | 250.999996 | 59.000000 | 0.000001 | 7.423447e-07 | 1.327465e-07 | 0.000005 | 0.000002 | 3.281200e-07 | -0.000002 | -1.307099e-07 | 3.196277e-07 | 5.418402e-08 | -2.917852e-07 | 7.308653e-07 | -0.000002 | 0.000002 | -5.223541e-09 | 0.000004 | 0.000005 | -1.976124e-07 | 138.000000 | 233.999999 | 228.999997 | 94.999998 | 0.000001 | 0.000002 | 255.000000 | 221.000001 | 221.999998 | 63.000001 | 0.000002 | 0.000005 | 4.826824e-07 | -0.000005 | 0.000002 | 3.892250e-07 | 6.972123e-07 | -2.291508e-07 | -2.135472e-07 | -4.984975e-07 | -8.258425e-07 | 0.000001 | -5.332055e-07 | 5.139264e-07 | -0.000002 | -0.000004 | -0.000002 | -0.000003 | 146.000000 | 232.000000 | 232.000002 | 96.000000 | -0.000004 | -0.000006 | 242.000001 | 226.999999 | 222.000000 | 6.300000e+01 | -0.000005 | -4.653743e-07 | -0.000005 | 0.000002 | -0.000002 | 0.000001 | -7.986391e-07 | -4.286650e-07 | -1.681014e-07 | 6.363970e-07 | -6.975870e-07 | -5.423990e-07 | 0.000001 | 0.000002 | 0.000002 | 0.000005 | 0.000002 | -0.000005 | 119.000000 | 232.000000 | 227.999997 | 75.000000 | -0.000001 | -5.077659e-07 | 208.000000 | 232.000004 | 212.999999 | 2.900000e+01 | 3.296967e-07 | -6.241514e-07 | -0.000005 | 0.000002 | 5.651978e-07 | -5.163183e-07 | 0.000002 | -1.174552e-07 | -5.947502e-08 | -2.880678e-07 | -0.000001 | 5.352320e-07 | 0.000002 | -0.000002 | -0.000002 | -0.000005 | -0.000004 | 0.000001 | 139.000000 | 235.000003 | 214.999998 | 18.000001 | 0.000003 | -0.000008 | 169.000000 | 234.999997 | 211.999998 | 12.000004 | -0.000003 | 0.000004 | -0.000005 | -2.677840e-07 | -3.174695e-07 | -0.000002 | -5.629809e-07 | -3.012789e-07 | -1.447285e-07 | -6.046403e-07 | 1.118729e-07 | -9.513843e-07 | -2.911069e-07 | 2.894738e-08 | 0.000004 | 0.000005 | -3.351748e-07 | -0.000003 | 158.000000 | 236.000003 | 213.999998 | 14.000003 | -0.000002 | -9.949699e-07 | 176.000001 | 234.999997 | 215.000001 | 32.000002 | 0.000003 | 0.000005 | -0.000002 | -0.000001 | -0.000002 | 9.265024e-07 | 0.000002 | 4.011770e-07 | 1.363091e-07 | 3.289930e-07 | -2.624320e-07 | -0.000002 | -0.000002 | -0.000003 | 3.360567e-07 | 0.000004 | 1.282302e-07 | 2.258955e-07 | 152.000000 | 232.000001 | 227.000003 | 66.000000 | -0.000002 | 0.000002 | 203.000000 | 234.999997 | 217.000002 | 35.000002 | -0.000001 | -2.610268e-07 | 0.000003 | -0.000002 | -0.000002 | -0.000002 | 7.528400e-07 | -1.202886e-08 | -1.592361e-07 | 5.095788e-07 | -4.052591e-08 | -2.873870e-07 | 5.500636e-07 | 0.000001 | -0.000002 | 6.275720e-07 | 4.274649e-07 | 0.000001 | 1.450000e+02 | 2.340000e+02 | 229.999998 | 90.000000 | -0.000002 | 0.000002 | 2.080000e+02 | 235.000001 | 212.999995 | 8.999999 | 6.923674e-07 | 0.000001 | -8.325330e-07 | -5.767398e-07 | -1.849482e-07 | 0.000002 | 2.427885e-08 | -2.188508e-07 | 1.379810e-07 | -1.391677e-07 | 1.906360e-07 | 4.654391e-07 | 0.000001 | -0.000001 | -4.551377e-07 | 7.378563e-07 | -0.000002 | 3.950150e-07 | 119.000000 | 236.999998 | 229.000001 | 7.600000e+01 | 0.000003 | -0.000005 | 1.670000e+02 | 241.000000 | 208.000003 | -0.000004 | 4.021377e-07 | 0.000002 | 0.000002 | 0.000001 | 7.859119e-07 | 2.466921e-07 | 1.444405e-07 | 5.295760e-08 | -1.867194e-08 | -2.868018e-07 | -7.280360e-07 | -6.257008e-07 | 0.000001 | 9.323434e-07 | 0.000002 | 8.744090e-07 | 0.000002 | 0.000005 | 76.000002 | 239.000004 | 218.999999 | 19.000002 | 0.000005 | 8.867231e-07 | 93.000000 | 244.999997 | 206.000001 | 0.000002 | -0.000002 | 0.000001 | -0.000001 | 3.297868e-07 | 7.765040e-07 | -1.484936e-08 | 5.969346e-07 | 4.756745e-08 | -9.525822e-08 | -1.043257e-07 | -3.057516e-08 | -7.851076e-07 | -8.448512e-07 | 0.000002 | -0.000001 | 8.809801e-07 | 1.723858e-07 | 0.000001 | 4.900000e+01 | 239.000004 | 203.000003 | -0.000001 | -0.000001 | 8.076585e-07 | 31.000002 | 238.000000 | 205.000002 | -1.852046e-07 | 6.976350e-08 | 0.000002 | -0.000002 | -0.000001 | 0.000002 | 5.335659e-07 | -5.266864e-07 | 8.280237e-08 | 3.033599e-08 | 5.283691e-08 | 1.380712e-07 | 1.159922e-07 | -0.000001 | -0.000002 | 0.000003 | -3.723668e-07 | -0.000002 | 7.009453e-07 | 40.000001 | 2.390000e+02 | 191.000002 | -0.000002 | -0.000001 | 0.000002 | 18.000001 | 228.000000 | 2.010000e+02 | 0.000002 | -0.000002 | -0.000001 | 2.139088e-07 | 0.000002 | -5.421757e-07 | 6.163737e-07 | -5.578727e-08 | 4.793912e-09 | -4.510589e-09 | -9.451520e-09 | -1.198971e-07 | -2.364927e-08 | -9.384266e-07 | -8.592667e-07 | 0.000002 | -7.577064e-07 | -0.000002 | 0.000001 | 29.000000 | 245.999998 | 198.999995 | 0.000002 | -0.000003 | 0.000001 | 9.000003 | 239.000002 | 219.999997 | -0.000002 | -0.000001 | 0.000002 | -0.000002 | -0.000002 | 4.965935e-07 | -2.344399e-07 | -1.488622e-08 | 1.608706e-08 | -3.893886e-11 | -3.305654e-09 | -5.438611e-09 | -4.320514e-08 | -2.151095e-07 | 3.743793e-07 | -6.565379e-07 | 6.560168e-08 | 2.924545e-07 | -2.304249e-08 | 9.522988e-07 | 1.600000e+02 | 1.340000e+02 | -3.102456e-07 | 8.776177e-07 | -0.000002 | 6.155732e-07 | 1.380000e+02 | 128.000002 | 3.771737e-07 | 5.207580e-07 | -1.732680e-07 | -6.984834e-07 | -2.398092e-07 | 2.374313e-07 | -4.985816e-08 | -2.682765e-10 | -2.551856e-09 | 1 |